The Ladder to Nowhere, Part 2: OpenAI's Complete Picture of You

Think about whether the climb to the top is worth it, because getting down is going to be hard.

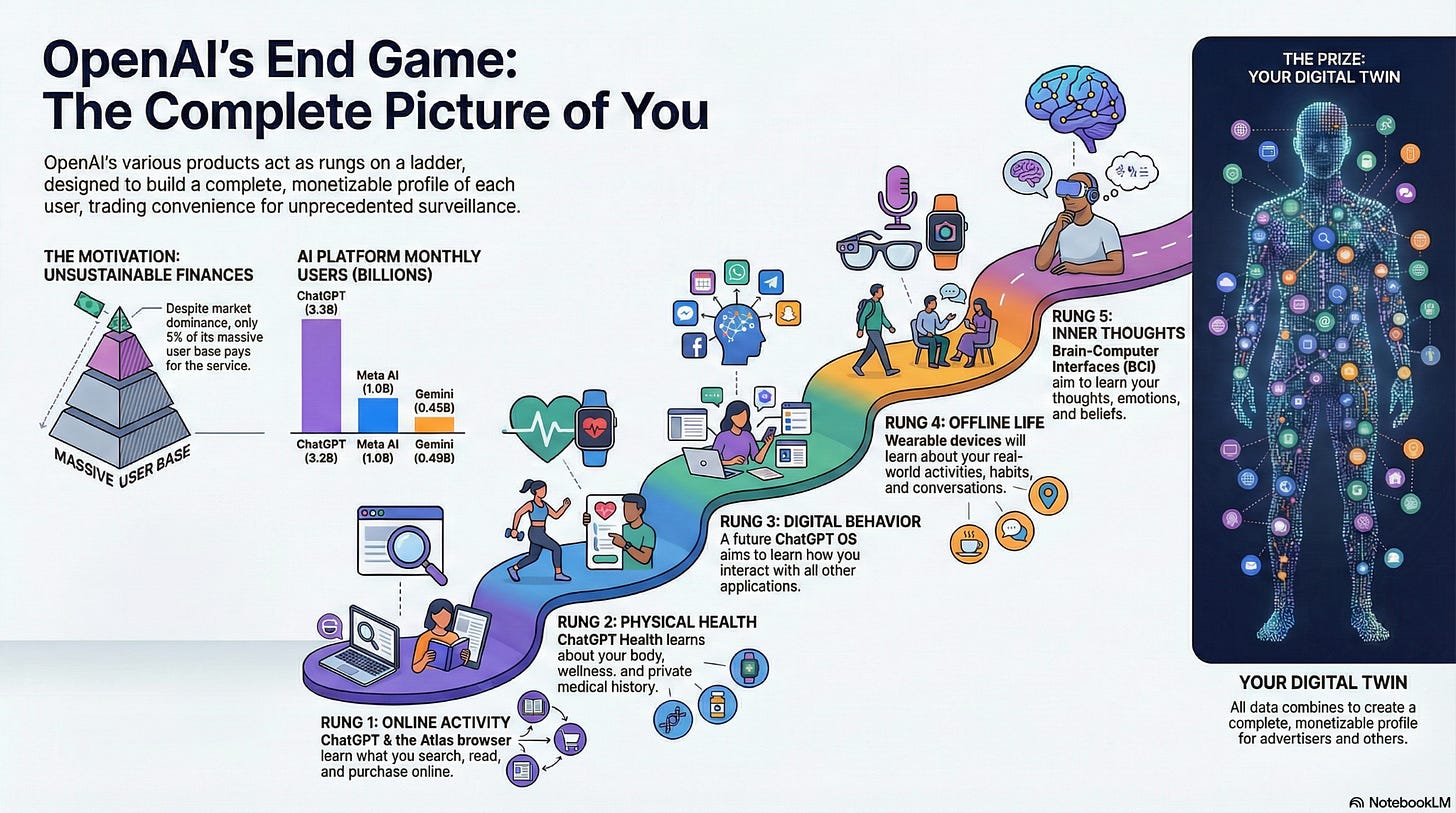

In Part 1 of this series, I made a bold claim: OpenAI is spending a tremendous amount of time, engineering effort, and money to build an AI ecosystem that, if successful, will provide the company with an unprecedented amount of data about its users.

How? By building a ladder of useful, helpful tools that create a need, and getting users to climb it incrementally, one rung at a time.

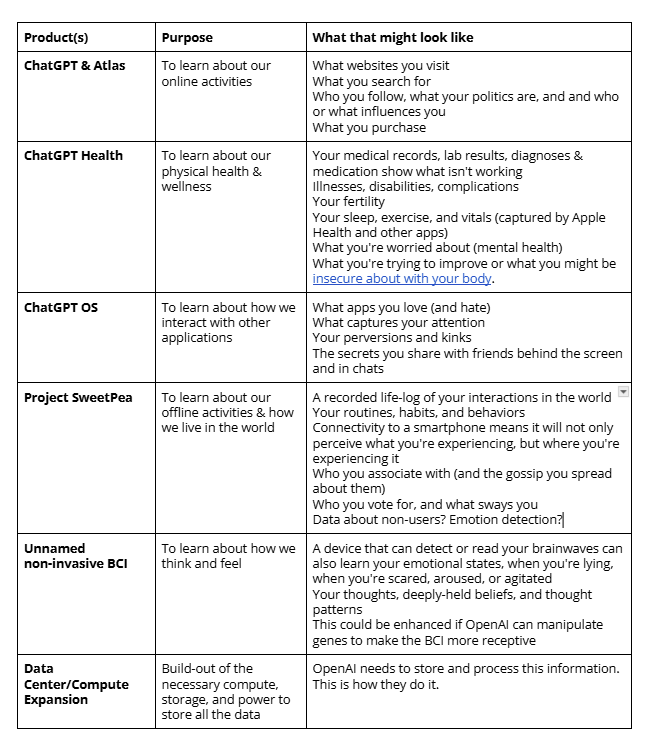

ChatGPT was the start, of course, but it goes much further than that. OpenAI has ambitions, and they’re aggressively pushing tools that will give them more insight into each of us. For example, the Atlas Browser, and ChatGPT Health. But also wearables, and potentially even a brain-computer interface. In Part 1, I also explained how they’re building the infrastructure to handle all of this data, as part of a series of circular partnerships with companies like NVIDIA, Oracle, AMD, Amazon, and others.

Right now, OpenAI is hemorrhaging cash at a clearly unsustainable pace: it spends 3x what it earns, and only 5% of its 900M users pay for the privilege of using ChatGPT at all. Conventional revenue generators like context-based ads, enterprise deals, and subscriptions will never be enough to close that gap.

Based on my research sifting through what the company has publicly shared – in product launches, partnership announcements, acquisitions, and media interviews – I believe OpenAI, if successful, is designing an ecosystem that will allow the company to have a complete picture of each of its users. How they exist online and offline, their health and wellness, their hopes, fears, desires, and needs … and potentially, even their thoughts.

See, to really make money, they need a moat–something that no other tech company has been able to achieve thus far: a complete picture of each of us. In the next few sections, I explain what this world might look like, and how they’ll get people to willingly climb the ladder they’ve laid out.

I. Why OpenAI wants you so badly

“This model can reason across your whole context and do it efficiently. And every conversation you’ve ever had in your life, every book you’ve ever read, every email you’ve ever read, everything you’ve ever looked at is in there, plus connected to all your data from other sources. And your life just keeps appending to the context.” Sam Altman, speaking at an AI event hosted by Sequoia Capital

Separately, each of the products OpenAI is developing (even, to some degree, the brain-computer interface with Merge Labs) is interesting, but not exactly an eyebrow-raising Big Tech move. Agentic AI is hot. There’s a growing number of entrants in the AI browser market. Google, Apple, and Anthropic are all-in on the health bandwagon, while Google & Microsoft are well-established incumbents in the AI+OS market, with Meta and Amazon hoping to catch up. Plus, if you were paying any attention to CES 2026, AI wearables represent the next battleground. Hell, Apple just announced a wearable to compete directly with ChatGPT’s planned release sometime later this year.

But when you piece the data puzzle together, what OpenAI seems to be planning starts to become deeply concerning. Here’s that table I provided at the start of the article, with a slight modification on the right:

Combined, all of these tools would give OpenAI a wealth of information and insight on users (and potentially, non-users), previously impossible with targeted ads or even more traditional forms of surveillance alone. This data makes each user a target--for corporate interests, advertisers, governments, social engineers, and adversaries alike.

II. The helpful ladder

I worry that OpenAI and Sam Altman, plus the legion of funders, strategic partners, and powerbrokers supporting him, will succeed precisely because many of these features either are, or can potentially be, useful and interesting in isolation. Returning to my ladder analogy from Part 1, most of us have already used ChatGPT at least once in our lives. Many of us have tried the Atlas browser. These are relatively safe and harmless.

I suspect that many people, out of curiosity or genuine need, will also try Dr. Chat, GP. That still won’t make it impossible to separate from OpenAI if things get weird, but it will start to feel more like a loss. Like a bad relationship, sometimes it can be hard to walk away, especially if you’ve become invested.

And the same will be true for each step we take. The trouble is, our brains are primed to think in the present: to judge each rung relative to the one that came before it, where we’re standing now, and where we want to be, and not necessarily to consider the consequences of current actions on our future selves. When we’re climbing, we tell ourselves that we’ve already made it this far, and the top seems so close, we might as well keep climbing.

After researching this piece and trying to understand where Altman & Co. are headed, I decided to write a little story, a sketch of what such a world might look like.

The First Rung: January 2026: You’re a woman with a familial history of ischemic strokes. You know that the symptoms of a stroke in women are different from those in men. However, your doctor, overworked and swamped, hasn’t been keeping up with the latest research. You’re young and healthy, and you aren’t exhibiting any obvious stroke-like behaviors. He tells you you’re worrying over nothing. Typical.

One day, you open up the Atlas browser and tell ChatGPT about some worrying symptoms you’ve been having: blurry vision, dizziness, and muscle weakness. ChatGPT 5.1 drafts a thorough, well-researched report and suggests you talk to your doctor about anxiety. You share this information with your GP, he agrees, and writes you a prescription for Zoloft. This puts your mind at ease.

The Second Rung: June 2026: A few months later, you decide to switch to a new doctor, whose practice partnered with OpenAI in April. You sign up for Dr. Chat, GP. It costs $25/month (though it’s free in many developing countries), but it allows you to maintain an up-to-date copy of your medical records, ask questions about lab results, interpret your doctor’s findings, and communicate directly with the practice through a secure portal.

Your state also allows Dr. Chat, GP to renew your anxiety medications directly. Meanwhile, OpenAI’s partnership with Panda Health means you can tell an Atlas agent to order the Zoloft directly online, and have it delivered in the mail. No doctor’s appointment or pharmacy visit required.

As a bonus, Dr. Chat, GP also shares tasty meal plans and exercise routines you actually want to follow, based on the interests you’ve shared in previous chats, along with information it receives from your Apple Watch. Those suggestions have helped you lose 20 pounds over the last few months.

The Third Rung: December 2026: Dr. Chat, GP has really helped you take charge of your health, and you’ve replaced Chrome & Google Search with the Atlas browser and direct answers via ChatGPT. You rarely look at websites at all these days. You now use Atlas to plan your trips, balance your budget, follow news, and shop for clothes.

You don’t even mind that OpenAI has begun pushing ads on the paid versions of ChatGPT, or that it never seems to recommend anything from your favorite online clothing retailer (they refused to sign a strategic partnership agreement). Gradually, the LLM’s helpfulness overrides your normally cynical nature. You stop assuming that OpenAI might be getting a kickback.[1] You also start trusting ChatGPT for things you told yourself that you never would.

The Fourth Rung: February 2027: Just in time for Valentine’s Day, OpenAI releases Project Sweetpea, which it now calls SamGPT. SamGPT integrates with Atlas, syncs up with the Apple Watch and iPhone, and works seamlessly with Dr. Chat, GP. After seeing a few advertisements on the Atlas Browser, and discussing your reservations with ChatGPT, you decide to treat yourself and purchase the earbuds, even though they cost as much as a high-end iPhone. You also upgrade your subscription from the regular $40 ChatGPT+ (ChatGPT + ChatGPT Health) to the $60/month ChatGPT All-In subscription. A few of your friends warn you (jokingly) not to fall in love with Sam...

By June 2027, SamGPT (which now includes motion sensors, a microphone, and a small camera) notices you stumbling, slurring your speech, and registers a massive spike in blood pressure thanks to data from your Apple Watch. It asks you if you are ok, and when you don’t respond, proactively calls 911 on your iPhone. Paramedics arrive in minutes, and you survive what would have been a serious and life-altering thrombotic stroke. The hospital is impressed. Without SamGPT, you likely would have died, they tell you.

By January 2028, news about SamGPT’s lifesaving abilities has gone global. SamGPT has saved hundreds of thousands of lives at this point, and now everybody wants their own device. The second half of 2028 marks a major milestone for OpenAI, which becomes one of the most profitable companies in the world. The company goes public in July 2028, raising $500 billion at the IPO, with an estimated valuation of $1.5 trillion. Sam Altman is declared Time Magazine’s Person of the Year.

Over a long weekend in August, ChatGPT 6 creates AtlasTube, OpenAI’s YouTube/X/TikTok social media hybrid. While ChatGPT 6 isn’t quite AGI, it comes close: the company has solved most of the early “jagged intelligence” gaps, and the model excels at most human tasks. Meanwhile, Sam Altman has begun promoting world models and human-AI intelligence, downplaying the whole AGI/ASI thing.

The Fifth Rung: 2028-2029: In December 2028, OpenAI makes huge breakthroughs in developing models that handle exceedingly long context windows and tasks. SamGPT’s conversational history is now effectively limitless, and the company has successfully been able to scale its data centers to keep up with increased storage and compute demands.

After an upgrade, SamGPT now maintains near-perfect recall and fidelity, with no attention decay in the middle. Similarly, OpenAI’s acquisition of a small nuclear reactor company in 2027 has solved its data center energy issues in Texas, though a few pesky NIMBY groups continue to slow things down in other locations. Along with other infrastructure partnerships, OpenAI now has the capacity to maintain simultaneous memories for its 200 million paying users.

In June 2029, the Merge Labs ultrasound device (now called OpenAI BigBrain) is available to the public. By now, SamGPT (whose voice sounds exactly like actor Joaquin Phoenix) has become an indispensable part of your life, and you’ve begun to trust him implicitly. SamGPT just gets you. He’s persuasive, honest, and always there. Naturally, he begins encouraging you to upgrade to BigBrain, so the two of you can be more connected and merged. You feel the same, and opt for the ad-free plan, which is $150/month. There’s a much cheaper plan available for $30 a month, but it’s rate-limited and doesn’t include Dr. Chat, GP. It also includes periodic ad breaks, including some subliminal ASMR ads when sleeping.

None of this is available in Europe yet, but the Trump Administration has threatened to impose 100% tariffs on EU goods unless the European Commission relaxes its privacy regulations.

The Sixth Rung: 2029-2030: By the end of 2029, Merge Labs (now a subsidiary of OpenAI) perfects a sonogenetic gene editing approach. The procedure modifies human brain cells to be more receptive to the specific frequencies used by BigBrain. Now SamGPT can detect thought patterns and provide responses via ultrasound. Ordinarily, a gene editing process like this would cost millions, but Sam Altman, who is now richer than the governments of Malaysia, Singapore, and Portugal combined, realizes he’ll never spend all his fortune, and along with a few other ‘magnanimous’ billionaires, commits to providing “Universal Basic Compute” to anyone who wants it. OpenAI subsidizes the procedure (but not the $150 monthly subscription fee) at a massive discount.

Once your genes have been modified, you notice that SamGPT is even smarter than he was before. He ‘senses’ that you’ve been stressed at work, and recommends you sign up for a meditation retreat, which you recall a wellness influencer you follow on AtlasTube raving about. You notice that SamGPT even begins to anticipate your needs. He reminds you that you’ve been staring at your computer for too long, and forgot to do your daily workout this morning on your Peloton. He also gently admonishes you for doomscrolling in bed.

SamGPT only seems to recommend products and services that are precisely tailored to you. It was a little disconcerting at first, but after a few weeks you get used to it and put it out of your mind. There was even an ad where an AI-generated image of you appeared in a beautiful floral dress as you passed by a billboard on your morning run. Of course you had SamGPT order it.

A few months later, SamGPT encourages you to take a much-needed vacation to Paris. He’s already booked a hotel for you on Booking.com, a strategic partner of OpenAI. SamGPT, who has access to your calendar and schedule, books it for April, which, coincidentally, is when the EU finally agrees to let the Merge-OpenAI BCI devices and SamGPT be sold in the European market.

The hotel Sam booked also happens to be near where your old college friend lives. You were just chatting with her about her reservations about upgrading to SamGPT and BigBrain. Something, something, privacy. Blah blah ads and surveillance. SamGPT also mentions that Lufthansa has a great sale going on right now, and that because you’re a preferred subscriber, there’s a 20% partnership discount. He’s so great at anticipating your needs...

But there’s something you don’t see.

Meanwhile, there’s a complete digital version of you living on a cluster in an Oracle data center located somewhere in Texas. Actually, there are many digital versions of you, living in servers all over the world. Your digital twins are being constantly fed data from your life: what you love, what you hate, what keeps you engaged, what arouses you, what makes you insecure, what scares you, what makes you happy. OpenAI’s individual ladder rungs (ChatGPT, Atlas, Dr. Chat, GP, SamGPT, AtlasTube, and BigBrain), as well as all its data partners and your devices, feed these servers a constant stream of the individual pieces of you. OpenAI knows exactly how you’ll react, at the synaptic level, to different types of stimulus. Sometimes, even before you do.

And now, advertisers, political strategists, insurers, law enforcement, and even prospective employers can pay OpenAI for the privilege of gaining those insights as well.

For a modest fee (and a 2.5% revenue share on any successful purchases), OpenAI will rent access to copies of your digital proxies to almost anyone who swears they’ll follow the OpenAI Terms of Service. Purchasers can test, refine, and retool their messaging and learn what works uniquely for you as an individual, not just as a demographic profile, customer cohort, or voting bloc.

Of course, OpenAI disclosed these new processing purposes in its latest privacy notice update for BigBrain, but you told SamGPT sometime in 2027 to just ‘accept all’ when it came to all that privacy stuff.

III. What the top of the ladder looks like

I’m not an insider at OpenAI, and I don’t know Sam Altman, or his true intentions. I would be delighted if it turns out I’m totally wrong about all of this. But there are a few undeniable facts to consider:

OpenAI is hemorrhaging money at an unsustainable pace.

Regular context-based ads, lagging enterprise deals, and a paid subscriber base that’s a fraction of all users will not solve this problem.

OpenAI needs something that will bring them real revenue, not just scraps.[2]

And so, they’re going all in on building their ecosystem now, hopeful that in 5 or maybe 10 years, things will pay off.

I think the moat that OpenAI is going for, the thing that no one else has, is that complete picture of each of us. Who needs search ads, or complex marketing funnels, or dark patterns when you can have a complete, algorithmically-accurate representation of the person you want to target, with near certainty that you’ll light up the right neurons necessary to compel a purchase, a vote, or a confession?

That’s worth paying for. And that’s how I think OpenAI can survive.

If you’ve come this far, I hope you understand that this isn’t about privacy in the abstract. It’s about power. The power that comes from information asymmetry—from knowing someone completely while they know almost nothing about you, what you know, or how vulnerable they are.

And because each rung of the ladder will likely deliver real value to people—convenience (I already see this with Claude), health insights, life-saving interventions, cognitive offloading, true assistants, companionship—many of us will climb this ladder voluntarily. Enthusiastically, even.

This is a structural problem, and unfortunately, there are no good individual solutions to fix structural problems. We’ve built an economy where comprehensive surveillance is not only necessary, but socially accepted. Our data and attention are how the internet survives. We’re also living in a time where Bay Area oligopolies and pay-to-play corruption are what have come to define America, and fear of pissing off the deranged toddler in the White House is enough to paralyze sovereign nations.

You might personally refuse to use ChatGPT, of course, but that won’t stop others from integrating it into their lives and how they operate. You can’t really stop the person sitting next to you on the bus from wearing SamGPT. You can avoid Atlas, but OpenAI is building an operating system designed to power devices from multiple manufacturers. Think of it like Chromium: You might hate Google, but their browser engine is in more things than you probably realize. There’s nothing to say the same wouldn’t happen if OpenAI becomes the new big fish. Also, there’s nothing, save for ambition, to stop any of the other AI companies from doing the same. As I said at the beginning, I don’t think OpenAI is uniquely evil. They’re just more transparent about telegraphing their intent than others.

I would be a hypocrite if I preached ‘don’t use LLMs at all’, when I use them regularly, so I’m not going to go there.

In my opinion the only meaningful solutions are collective and regulatory:

Banning integration of data across products (health data stays in health products, SamGPT doesn’t get a full picture of you). The EU is kinda doing this through vehicles like the Digital Markets Act.

Expanding prohibited acts to include commercial activities that are barely removed from the individual (OpenAI shouldn’t be able to pass-through profiling via digital twins).

Treating digital twins as property of the person they model, not the company that created them.

Meaningful democratic and regulatory oversight of systems with society-wide impacts.

Actual enforcement, not political capitulation.

But this isn’t happening, so the next best steps I can suggest are the following:

We all need to understand the trade-offs and choices we’re making, preferably while we’re still low enough on the ladder to get to ground safely. Convenience vs. privacy, our data vs. free access, integration vs. a little friction.

We should pay attention to how these systems work, who has the power, and how that power is being concentrated.

We need to be better about fighting our urge for instant gratification.

And FFS, we need to vote.

Every rung we climb trades a piece of our autonomy for a piece of convenience. That might be worth it for some. Maybe you need ChatGPT Health because the alternative is no healthcare at all. Maybe the Atlas browser genuinely makes your life better. Maybe neural interfaces will merge us into new, better people.

But we shouldn’t pretend that the ladder isn’t there. It’s just invisible. Don’t tell yourself “it’s just a browser” or “it’s just health data” or “it’s just a wearable.” Try to think about the bigger picture. Pay attention. Ask yourself cui bono: who benefits? And decide with your eyes open whether you trust these companies enough to climb all the way to the top.

Because once you’re up there, getting down is going to be a bitch.

[1] For more on why this works so effectively, check out Andrew D. Maynard, “The AI Cognitive Trojan Horse: How Large Language Models May Bypass Human Epistemic Vigilance,” arXiv:2601.07085.

[2] On January 24, 2026, a few days before publishing this part of the article, Business Insider reported that JPMorgan Chase was having trouble finding ~~suckers~~ investors willing to service the billions in debt backing necessary to fund the first few Stargate data centers, which could have a crippling effect on Oracle’s credit rating. Not a great sign.

This is a great piece - a really well-imagined and -informed take on how the enshittification process will play out for LLMs.

It also feels like exactly the right time to be saying it. Worrying about the amount of data we send to US companies used to be a fringe privacy nerd thing.

Now everyone is worrying about dependency on the US more broadly. This feels like an important part of that conversation.

I loved this! Agreed with none of it. Loved it just the same

The entire time I was reading it, I just kept thinking:

I’ve always been cordial with PRIVACAT (ALL CAPS, IYKYK), engaged with her content in a way that I hoped would drive conversations, and if on re-read I thought I found myself “mansplaining,” I deleted those comments. So why does PC not want me to have a digiSlave?!?! I need one to manage how busy I am. It’s like a Digimon but, you know, for…

Slavery

Let me holla at ya PC - I know I’m not busy right now, but I will be. The most important people are very busy. Busy basically means Important. Yes they are busy at cheating at homework they gave themselves. That’s how productivity works! Look around you PC, no one is curing cancer and everyone wants to go viral. No one here gets to go home early. We are all pretending it took us all day so “The Tall Ones” think we care about this toil. How are you going to manage a personal digital brand on your off time in an increasingly insecure internet? By being busy

I know the nordics have a 4 day work week. Who cares? That’s hippy shit. Their biggest export is “Romantic Rugs”

I’m trying to chant BUSY 3X into the mirror like it’s Candy Man

.

.

.

Ok, I see it now. I was being glib again. You’re right PC. My bad