48 Laws, 25 Years Later: Law 27 -- Tech Hype Cycles are Actually Cults in Disguise

Or how Silicon Valley builds cultlike followings and why they work.

This post was inspired by reading Robert Greene’s 48 Laws of Power (abebooks | bol.com). I briefly wrote about Law 27 a few days ago in this Substack Note.

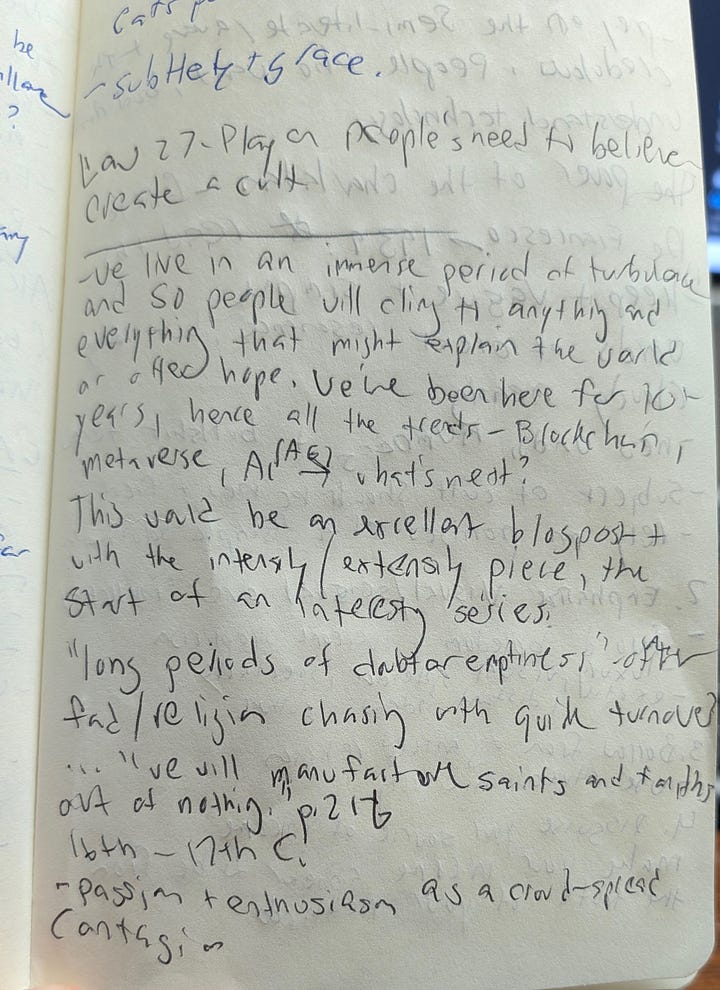

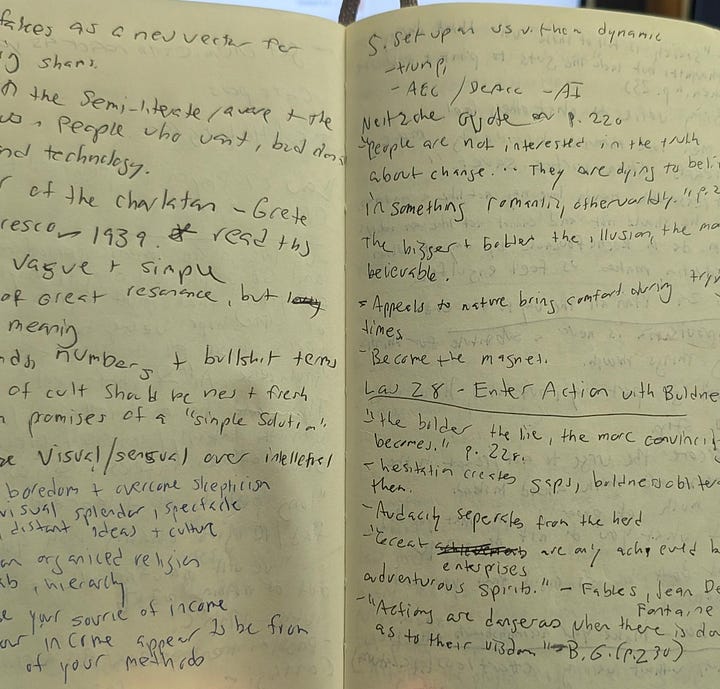

So I’m going to open with a confession: 95.3% of this post about how tech hype is just a cult in disguise came from my deeply strange, cynical brain, with the main thesis scribbled over three pages of nearly illegible handwritten notes.

But that last 4.7%? That was Claude.

Claude, an LLM created by Anthropic PBC, a Silicon Valley AI tech company, helped me write a blog post about how hype bubbles mirror cults, and how the most successful bubbles and cults follow Law 27 from the 48 Laws of Power. Claude looked at my notes, and magicked up some shockingly insightful observations. Step 3 and Step 5, for example, benefitted greatly from the random word-stringing-together machine that is Sonnet 4.5.

Anyway, with that off my chest, let’s get to the good stuff.

LAW 27 — PLAY ON PEOPLE’S NEED TO BELIEVE TO CREATE A CULTLIKE FOLLOWING

People have an overwhelming desire to believe in something. Become the focal point of such desire by offering them a cause, a new faith to follow. Keep your words vague but full of promise; emphasize enthusiasm over rationality and clear thinking. Give your new disciples rituals to perform, ask them to make sacrifices on your behalf. In the absence of organized religion and grand causes, your new belief system will bring you untold power (p. 215)

We hate living with uncertainty. Chaos and feelings of powerlessness freak us out, and times of great transformation cause many to look for any answer that can explain why everything feels like it’s on fire. For some people, this means latching on to forces (be they people or movements) that seem to explain the unexplainable, or at least provide some framework to respond to the chaos.

Unsurprisingly, these forces tend to pop up during times of major social, economic, or technical change, so when there are wars, revolutions (physical or institutional), or political strife, you’re more likely to see new faiths emerge, new doomsday cults eager to recruit, and an overabundance of charlatans and grifters.[1]

For example:

The Age of Revolution (Late 18th to Early 19th Centuries) → Mesmerism / animal magnetism in Paris, and personality cults around Robespierre, Napoleon, and Simón Bolívar.

Response to Industrialization & Dawn of the 20th Century → A major rise in religious cults/spin-off faiths, e.g., the New Thought movement, cargo cults, and the Theosophical Society.

Social Unrest in the 1960s & 70s → Doomsday/Millenarian cults, Jonestown/The People’s Temple, the Manson Family.

Climate Change/Political Uncertainty → Longtermism/effective altruism, which redirect the focus from addressing current harms & threats to mitigating harm to distant “future people.”

But I’d argue that people are also more eager to follow fads, hype cycles, and big social movements. After all, as Greene points out, during times of turbulence, “we will manufacture saints and faiths out of nothing” (p. 216). Techno cults aren’t really any different from the traditional kind, although most of them aren’t expecting the rapture, unless by rapture we’re talking about AGI.

The 2008 Financial Crisis → Bitcoin/blockchain/DeFi evangelism, which offered a ‘solution’ to our distrust of financial institutions.

The Covid Pandemic → The Metaverse / NFTs had cultlike followings, and both offered an escape from lockdowns and physical isolation in the form of social connections.

Job Displacement & Loneliness → The belief that AI will either save us or destroy us all. There’s even been a huge rise in the number of AI cults and people who flock to AI for emotional support/counseling/friendship as human connections get harder to maintain.

The Cultlike Nature of Hype Cycles & Speculative Bubbles

In Law 27, Greene provides the necessary ingredients for building a successful cult. He specifically notes that there are five steps necessary to get your nascent following off the ground. All of which, I might add, coincidentally seem to line up nicely with tech hype cycles & bubbles.

Step 1. Keep it new, vague & simple

Success requires that cult leaders and hype evangelists use words of great resonance, but cloudy meaning. It’s best to avoid details with whatever it is you’re promising (does anyone even know what the metaverse was supposed to do, or why memecoins matter?). Use new or scientific/technical-sounding words, numbers & fancy-sounding terms of art (10x engineer, explainability, d/acc, e/acc).

Greene reminds us that “[p]eople are not interested in the truth about change … They are dying to believe in something romantic, otherworldly” (p. 221). The vaguer the words, and the fuzzier the story, the more opportunity for followers to project their own hopes and dreams onto the movement or trend.

Second, the subject of the cult should be new & fresh, and rely on big, bold promises offering simple solutions or radical life transformation. AI will take the toil out of life and stave off loneliness, the blockchain will liberate us from centralized finance and make us all fabulously wealthy. Technology will make us super productive!

Tip: The bigger & bolder the illusion, the more believable it tends to be.

Finally, successful movements need timelines and deadlines that are flexible. Alchemists were constantly pushing back the dates on when they’d turn all that lead into gold. And think of all the doomsday cults that have forecast the end-of-days, only to revise the prophesied date when it comes and goes.

AGI, self-driving vehicles, and quantum computing have been ‘right around the corner’ for years … First it was 2020… then 2025… now 2030… The only difference between AGI and the rapture is VC funding—but more on that in Step 4.

Step 2. Emphasize the visual/sensual over the intellectual

Boredom and skepticism kill cults & movements. When people apply critical thinking to analyze a cult leader’s claims, they ask questions, and doubts emerge. And if there isn’t enough novelty and excitement going on, followers will follow the next shiny thing.

The best way to avoid either, Greene advises, is to constantly overwhelm followers with beauty, splendor, and pageantry. There’s a reason that model releases are more like movie premieres than software updates, and why Google I/O and Apple’s Worldwide Developers Conference are akin to religious pilgrimages for many.

Step 2 explains gamification. And why every social platform (including, sadly, Substack) has pivoted to short-form videos, reels, and ‘Live’ events. It’s why Sora and Veo are constantly talked about, and why deepfakes are used by fraudsters and politicians so effectively. Step 2 single-handedly explains why Zuckerberg spent billions of dollars to re-invent _Second Life_ for VR headsets, and why I bet that the next big hype cycle will be around wearable neuraltech.

Step 3. Borrow from organized religion

Greene advises the modern-day cult leader to take a page from organized religion and develop rituals to partake in, rules to follow, & hierarchies and systems for separating the die-hard believers from the casuals and lay followers. You might be asking yourself how this manifests, so let me provide an example.

When I worked for Palantir (and later, Meta), I wasn’t just an employee, I was a Palantirian. I didn’t just work for Meta, I was a Metamate. These terms made us special and different. We were the chosen few. It wasn’t just a job, but a calling.

We were out to change the world.

We had rituals, in the form of swanky company retreats, communions in the cafes, and weekly sermons in the form of all-hands meetings delivered by a messiah-like CEO. At least, that was the case for Palantir. Alex Karp really does have the charisma of a cult leader. Mark Zuckerberg, however, is still too much of a dweeb to ever be confused with any sort of spiritual leader.

I amassed dozens of symbols of the faith—t-shirts, hoodies, stickers, and swag from both companies. I wore my t-shirts with pride, like a Christian wears a cross.

And even though both companies tried to pretend that they were supporters of “flat hierarchies”, everybody knew it was a lie. Anyone paying even the slightest bit of attention knew who was living lavishly at the top of the pyramid, and who was meant to languish and toil at the bottom. As with any organized religion, the people who were most successful tended to coincidentally be those who were the true believers (or at least successful fakers).

I have no doubt in my mind that the likes of OpenAI, Anthropic, Google, FTX, Binance, and whatever tech companies come next also follow the precepts of Step 3.

Step 4. Disguise your source of income (or the effectiveness of your solution)

Greene reminds us that the greatest cult leaders and charlatans are experts at creating the appearance of wealth founded on the certainty of their message, all while hiding where the money actually comes from: the cult’s followers (employees, customers, patrons) or the swindler’s victims. Think of the alchemists in the 16th and 17th centuries, or today’s Prosperity Gospel churches, or Sam Bankman-Fried & FTX.

Nobody, bar the promoters, makes any real money from memecoins (case in point: the Trump Coin has fallen over 90% in value since launch). Prediction markets like Kalshi and Polymarket are mostly going to make money for the hedge funds and early investors who paid in, not all the schlubs who are now treating what is in essence unregulated gambling as a solid investment strategy.

But even in cases where the income comes from “sophisticated investors” or seemingly legitimate sources like hedge funds and venture capital, eventually even the most hyped techno cults fall apart. OpenAI reached a market valuation of $500 billion in October, despite having an estimated operating loss of $7.8 billion in the first half of 2025, and projected data center rental costs of $620 billion a year, rising to $1.4 trillion by 2033, according to HSBC. AI capital expenditures are expected to exceed $500 billion in 2026 and 2027, despite American consumers only spending around $12 billion a year on AI services. Clearly, this won’t last.

Like the alchemists who promised to transmute lead to gold, AI companies (and before them, IoT, the blockchain, and the metaverse) have all promised to radically transform business, society, wealth distribution, and how we live our lives. And like the alchemists who had ‘one more experiment’ before success, the transformational change we’re all waiting for never actually arrives.

And that leads to the last step…

Step 5. Establish an us vs. them dynamic

When the above aren’t enough, cult leaders, religious figures, and scam artists can always fall back on the tried-and-true method of setting up an us vs. them / in-group vs. out-group dynamic. Creating enemies is great, Greene notes, because it builds bonds and improves social cohesion, and importantly, it also allows the cult leaders to keep their hands clean by getting others to do the dirty work (Law #7).

Twitter is a cesspool (for many reasons) but it’s a perfect medium for nurturing pro/anti tribal warfare. There’s an us vs. them cult for everything:

E/acc vs. D/acc vs. Doomers

Effective Accelerationists (e/acc) argue that AI development should be “unstoppable,” with progress at all costs. AGI will save us all. Think of the Marc Andreessen / Beff Jezos types. Meanwhile, the decelerationists, or “decels,” argue that we should go slowly (or not at all) on AI. They are concerned that AI will, quite literally, destroy us all. The patron saint here is Eliezer Yudkowsky, joined by many in the effective altruist movement.

Crypto Maximalists vs. No-coiners

The crypto maximalists are all about holding on for dear life (HODL), and like to tell opponents to “Have fun staying poor.” They mock the “no-coiners” and “fiat cucks” who think crypto is a scam or bubble.

But even in the world of data protection, there are in-groups and out-groups, in the form of:

Innovators vs. Regulators

The innovators are all about “Moving fast and breaking things,” encouraging floors not ceilings in terms of regulation, and “global innovation”, while the regulatory crowd is concerned with Big Tech overreach, surveillance capitalism, and favors a measured, cautious approach with clear rules and limits.

What’s most interesting here is that once a cult, techno or otherwise, builds up enough of a following, the cult leaders can step back and let their supporters, critics, press, and even curious onlookers duke it out, which keeps everyone interested and allows the spectacle to take on a life of its own.

Am I Part of a Cult?

There’s something deeply ironic about using Claude—an AI tool from Anthropic, a company currently valued at over $60 billion—to write a piece about techno-cults, especially the cult-like aspects of the AI bubble.

I grappled with this dissonance during the process of writing this piece. Hell, I even argued with Claude about it.

Not because I believe that the LLM is my friend, or because I necessarily buy the hype, but because I recognize the value and comfort of the utility, notwithstanding how that usefulness is being contorted and exaggerated.

There are so many boom and bust cycles I could have included in this piece: the railroad boom of the mid- to late-19th century, the electricity boom in the early 20th century, and the dot-com boom of the late 1990s. But those felt different. There were plenty of grifters and conmen during those periods too, but that didn’t mean electricity or railroads were scams. The internet has spawned countless bubbles, cults, and frauds, but email, search, and TCP/IP actually work all the same.

Greene’s five steps don’t describe the technology. They describe the *narrative* around the technology. And right now, the narrative around AI, like the narratives around other tech bubbles, has all the hallmarks he mentions in Law 27:

vague promises (AGI is coming)

constant spectacle (new model every month, multimodal, AI Agents)

religious rituals (all-hands sessions with visionary CEOs)

disguised income sources (VC billions despite massive losses)

tribal warfare (doomers vs. boomers).

Still, real capabilities exist—Claude helped me write this. LLMs can be useful tools. But useful tools don’t need cults. They just need honest assessments of what they can and cannot do.

“We will manufacture saints and faiths out of nothing,” Greene wrote. In 2025, we’re manufacturing them faster than ever. The question is whether we’ll recognize the pattern before the bubble pops, or whether we’ll just move on to manufacturing the next one.

[1] Before folks freak out and call me a blasphemer, I am not equating organized religion with cults or charlatans so much as pointing out that they all target our strong need to believe, seek out the truth, and belong to … something. When tech fills that void, it’s not surprising it adopts religious structures. The question is whether tech deserves the faith religion commands.
