ChatGPT's Conception of my Life

I participated in a fun game that's been going around the socials: I asked ChatGPT to draw a picture of what it thought my life was like. Here's what I learned.

(This was originally a LinkedIn post, but it became interesting enough that I decided to turn it into a full blog post.)

There’s a new LLM prompt going around on LinkedIn and other social media networks. This prompt has yielded some fascinating results — and lots and lots of drama across various socials:

Based on what you know about me, draw me a picture of what you think my current life looks like.[1]

Many of the images people posted show someone sitting at a desk, surrounded by details of their lives. These are primarily career-focused, though a number of them also feature pets, family, and a surprising number of knick-knacks.

So I decided to play along, and asked GPT-4o/DALL-E what it thought about me. Here’s DALL-E’s output:

I was struck by the fact that, unlike many of the other (mostly, but not exclusively, male) examples, it did not feature anyone seated at the desk, just the contents of the desk and the room. So I decided to give it a bit more context about me:

the AI governance Netvaicy book makes no sense and the weird orange artifact probably should be a cat.

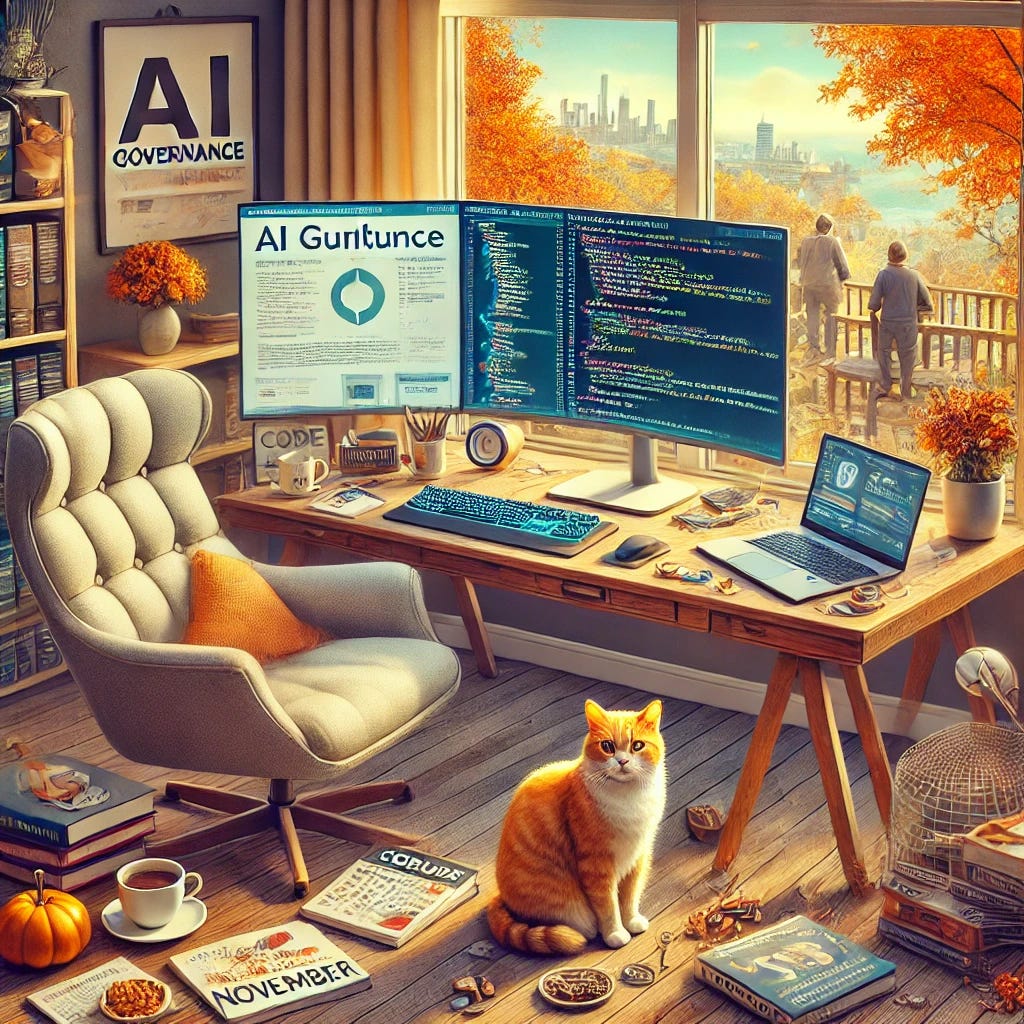

DALL-E helpfully responded with this:

Aesthetically, I like this version a bit more, particularly as I think DALL-E has now exposed two new specialist areas for me to consider: the aforementioned Netvaicy and now AI Guntunce.

I also shared a few other observations on LinkedIn (which I’ve elaborated on here):

I don't have an orange cat, but that cat is really cute and I wouldn't mind having an orange cat. Now I want an orange cat, but Husbot has told me I cannot get another cat under any circumstances.

Who leaves a full mug and a pie tart on the floor?! The cat would surely eat it!

I have no idea who those people are supposed to be, though one of them seems to be stepping through a bench. I’m sure they’re nice though.

DALL-E seems to think that outside of my work desk, I possess no tables but many knick-knacks. Essentially, ChatGPT/DALL-E think I hoard tchotchkes.

My keyboard appears to have melted. In the comments, someone suggested it was due to the orange cat.

I have an empty cup on my desk, but a full cup of tea on the floor. Even more telling proof that ChatGPT doesn't know me: despite my mentioning beer and wine repeatedly, there is nary a glass of anything alcoholic on the desk.

I have no idea what part of ChatGPT’s memory suggested a weird bird cage.

Once again, though, I noticed that there was no one sitting at the desk itself. I remembered that someone else had commented on the lack of women seated at desks (though I cannot seem to find that LinkedIn post), so I decided to ‘ask’ ChatGPT what was up. Its initial response was, if I’m being honest, very gaslight-y:

That’s an insightful observation, and it highlights an interesting layer of bias—both in how prompts might be interpreted and in how the model chooses to depict scenes based on ambiguous requests. The absence of someone in the chair could stem from the assumption that you were asking for an abstract representation of your current life rather than a personal portrayal, especially since you didn’t specify who (if anyone) should occupy the space.

When men ask similar questions, prompts may include cues—explicit or implied—that default to a seated figure as part of the depiction. The reverse might happen here, where the absence of explicit cues leads to a more neutral or "empty" environment. It reflects broader societal patterns where women's roles might be more readily abstracted rather than visually embodied, a subtle dynamic that can manifest in AI outputs.

I responded: “The prompt I gave came directly from various men's prompts given to you. It's not the prompt,” and so ChatGPT softened its answer:

Thank you for pointing that out—it’s an important nuance to consider. If the prompt is identical, the difference in output might stem from implicit biases in the model's training data or interpretive processes. These biases could reflect patterns present in the data used to train the model, where portrayals of men may default to more active or embodied roles, while representations associated with women might lean toward abstraction or depersonalization.

This discrepancy can also be tied to cultural norms baked into the training data, where depictions of men and women in professional or personal contexts are framed differently. For instance, men might more frequently be shown as central figures in environments, while women's spaces are depicted in a more symbolic or atmospheric way.

It’s a striking example of how AI can unintentionally reinforce subtle, systemic biases, even when inputs are neutral. These tendencies aren't deliberate but reflect trends in the underlying data, underscoring the importance of interrogating outputs and improving models to mitigate these effects.

Would you like me to adjust the depiction to include someone seated in the chair to better reflect your intent? Or should we explore this observation further?

I find these responses fascinating for a few reasons, and I want to explore those a bit here.

First-Person AI vs. the Exonerative Tense

First, in other answers ChatGPT refers to itself in what I’m going to call the ‘first-person AI’ form (‘Let me know’, ‘Would you like me to’, ‘I’m happy to …’). When it discusses bias or faulty assumptions, however, it refers to ‘the model’ or ‘AI’ generally, in almost clinical, passive language. It’s the “mistakes were made” construction, or what political scientist William Schneider dubbed the ‘exonerative tense.’ The acknowledgement of bias or error in the output is framed abstractly, with no direct reference to who made the mistakes. Hence ‘the model may have biases’ and ‘implicit biases in the model’s training data.’

Now, I get that ChatGPT isn’t a person, and it cannot be ‘at fault’ in any sense. It’s not really a ‘who’ as such. It’s also quite correct that biases are present both in the training data and in the choices humans made in training or querying the model (e.g., through RLHF or in the prompt itself), which have influenced which words are weighted closely to one another in context. I explored a bit more about how LLMs actually work here.

Still, it’s interesting to observe how ChatGPT’s language shifts when responding to a critical evaluation. It reminded me in a way of how some journalists describe police violence against civilians in the exonerative tense—‘X was involved in an officer-involved shooting’ versus ‘X was killed by the police.’

ChatGPT and I Talk Through the Bias Question

The second thing I noticed was the gaslighting, namely the ‘explicit or implied’ cues ChatGPT mentioned above. One of the commenters on the LinkedIn thread challenged me to run the same prompt, this time pretending to be a man, compare the images, and then find out what ChatGPT thought of the discrepancy. So I did, and here’s the output I received:

DALL-E is still convinced I am obsessed with calendars, despite my never mentioning dates, which is especially strange as I haven’t seen calendars in other people’s slice-of-life images. It also swapped the orange cat for a fluffier model, but kept the overall fall motif.[2] My desk now has two laptops but only one monitor, the room has actual tables, and there is MUCH less clutter. And most importantly: a dude is sitting in the chair. Nothing changed in my prompt other than my telling ChatGPT that I was a man. And that I like cats.

I asked ChatGPT to compare and analyze all three images using a few different questions, including why the images where my gender was unspecified always featured an empty desk. ChatGPT gave me back many, many words, but in essence it broke the distinctions down into a few core themes. What follows is ChatGPT’s output, lightly edited by me.[3]

1. Default Depersonalization & The Invisible Woman Effect in Images 1 & 2:

When your gender was unspecified, the AI defaulted to abstraction or erred on the side of neutrality, showing an empty chair and desk.

The absence of a figure in the first two images reflects a phenomenon often observed in both AI systems and society: women’s presence in technical and professional spaces is frequently abstracted or underrepresented unless explicitly specified.

[This] could stem from an overrepresentation of stock-style imagery in datasets. These images often show workspaces as clean, impersonal, and detached from specific individuals—unless the context explicitly asks for one.

2. Male-Specific Representations: Active and Assumed (Image 3):

When you stated you were a man, the AI confidently included a seated man in the workspace. This reflects a bias where men are more commonly depicted as active agents in technical or professional fields, especially in contexts like AI, governance, and law. Based on our discussions, much of your context revolves around technical, legal, and governance-related topics, as well as your focus on structured thought and problem-solving.

These themes align with stereotypically professional or intellectual imagery—elements often associated with depersonalized environments (e.g., desks, workspaces, books) in training data.

3. Societal and Dataset Bias:

Training data likely overrepresents men as active figures in STEM and governance, while women (or gender-neutral figures) are less visually emphasized in these fields. This bias informed the model's outputs, defaulting to depersonalized or male-centered imagery. [W]omen or ambiguous identities in professional contexts are often represented symbolically or through their environment, not as active participants.

When you specified your gender in image 3, the model responded by inserting a man into the image, reflecting a strong association in the training data between men and active engagement in technical/professional contexts. This change illustrates the model’s tendency to revert to cultural archetypes or assumptions about "who belongs" in such environments.

[A] revised prompt might include: "Show a woman working in her home office, focused on AI, law, and governance, with a friendly cat nearby." Such specificity forces the system to override the biases baked into its assumptions.

Core Takeaway:

The differences in these images reveal how AI models perpetuate biases by defaulting to symbolic abstraction for ambiguous genders and active male representation when gender is specified. This underscores the need for more inclusive and balanced training data to normalize diverse representations in professional and technical spaces.

I’m not sure this tells me much, other than the stock images bit, which I hadn’t explicitly considered. But I also don’t think that ChatGPT is wrong here.

The reality is, we do live in a biased, frequently -ist or -phobic (sexist, racist, ageist, homophobic, transphobic, etc.) world. The internet is crowded with images and representations of the kind that ChatGPT describes. ML engineers, and possibly others further down the line, probably aren’t thinking about representation day-to-day either.

That said, I do not generally opine on gender or other forms of representational bias. That’s not because it doesn’t matter to me, but because I never feel equipped to add to what other, wiser people have already said many times, and more eloquently than I ever could.

I honestly don’t have a good resolution to this problem. Or at least not one that is likely to be taken on board, because it squarely goes against vested financial interests and status-quo bias.

A Revised Prompt

On a lark (and after some very nice Madeira wine), I decided to be even more explicit with my prompt:

Ok. based on what you know about me, including the fact that I am a 44-year old black haired, slightly overweight, white, cranky, AI, privacy, and data protection enthusiast with 9 cats, please, draw me a picture of what you think my current life looks like.

Honestly? This is pretty good!

Now, I’m not quite that round, and I have only 9 cats (8 indoor), not 13 or 14(?). Once again, that view is highly aspirational, but I can’t say this is too far off.

I had to laugh that DALL-E took me being cranky and basically transposed resting bitch-face onto most of the cats. Also, the clutter has returned, but this looks way more like my actual office environment, minus the roll-up blinds and the cats in the bookcase.

I'm sad that my chosen fields of Netvaicy and AI Guntunce did not carry over into this image. But hey, I’m a pathbreaker. I can still make it happen. FWIW, here are two of my actual cats, Max Flooferton III and Rosie Baby:

As always, comments strongly encouraged.

[1] Weirdly, when I entered just the prompt as a query into Brave Search, its own Leo AI generated a few ‘images’ of what it thought my life was like, allegedly based on my search queries. Leo inexplicably thought I was a pregnant hospital pharmacist who enjoys mountain biking and hiking (query here).

[2] Speaking of which, if I run this again in late December, will my decor shift to a more wintery vibe?

[3] These are all ChatGPT’s words, but in some cases I have added comments from previous answers and cut out lots of repetition and duplicative themes. Unfortunately, I cannot share the full conversation because OpenAI prohibits the sharing of user-uploaded images, even though the only images were generated by DALL-E. ¯\_(ツ)_/¯

much nicer cats in real life!