From Frustration to Automation: How I Built a LLM-Powered Legal Case Summarizer

Programming generally scares me, but with the combined power of ChatGPT, Python, Obsidian and annoyance, I managed to write a pretty rad legal case summarizer anyway.

I’m going to tell you a little secret about myself: I am annoyed easily.

I realize that for some of you, this won’t be very revelatory. For example, my poor, long-suffering husband, friends & family, as well as some former colleagues and lovers, all know that my fool-suffering threshold1 is basically zero:

But, I make up for my seemingly endless low-level rage with the world by forcing myself to learn things that help me to be less annoyed. I believe the experts call this ‘growth,’ or maybe life hacking.

One way I do this, is by trying to understand why a seemingly annoying thing is the way it is. Understanding why often helps me to come to terms with what otherwise feels like arbitrary bullshit and unnecessary fool-suffering. Sometimes, of course, the answer is that shit is really complicated, and I then am less annoyed because I at least understand that there are reasons.

For example, I recently had a bit of a tiff with a certain department within the Irish government. In short, they told me that my birth certificate was not authentic and that I should just send them a real one instead. Critically, they did not tell me why my birth certificate, which has been widely recognized by other governments as being legitimate, was deficient. They only said that I needed to conjure up one that met their undisclosed standards.

Now, a normal person might have just requested a new birth cert and hoped for the best. But, I am not normal, so instead, I wrote an angry letter with specially-crafted words like ‘irrational and unreasonable’ and ‘contrary to the principles of natural justice.’ And guess what? My letter worked. Suffering avoided.2

But my low fool-suffering-threshold doesn’t end with people and opaque bureaucracies. It also extends to other, more mundane things, especially tasks that are repetitive or boring, or consume lots of my time but still need to get done. Especially, if I think said tasks can be automated in some way.3

Other people have also come to this conclusion, and for the most part, they become programmers or engineers. But, for like, a dozen different reasons, I have come to realization that despite my deep appreciation for automation and engineering, I do not have the aptitude, energy, or physical or mental capacity to become a programmer or engineer, any more than I could become a world-famous concert pianist. I have spent almost three decades of my life trying to learn both the piano and programming, and while I can passably read and play music, I cannot make my brain understand or remember how to generate the types of magic incantations necessary to get a computer to do what I want.

And so today, I’m going to tell you a story. This is a story about how I used LLMs to help me program and unblock what has been a persistent problem for many years, and in so doing, has made me a bit less annoyed with the world. Maybe even happy?

There are Too Many Cases, and Not Enough Time to Read Them All

When ChatGPT burst on the scene in 2022, a fire went off in my brain. I saw an immediate opportunity to solve a major pain point in my life. Namely, there are dozens of dense, but highly consequential legal opinions, judgments, and guidance materials put out into the world all the time.4 I want to read and make sense of everything that comes out but I cannot, because I also like sleeping, and spending time with cats, and actually going outside.

As I mentioned above, I am not a programmer by temperament, so using an LLM to help me write some code seemed like a perfect solution to what had been a years-long blocker for me. To test the waters, I asked ChatGPT to write a very simple Python script for me that would read some text, store that information in memory, make a request to ChatGPT with my prompt + the text, have ChatGPT perform its LLM magic, and send back a response that was displayed in my terminal. The first text samples were small, and the first prompt was very broad: ‘Please summarize this document.’

Carey’s Programming Tip #1: Set low expectations & start small and simple. Small and simple is smaller and simpler than you think. You’ll thank yourself later.

After a few false starts and some back-and-forth haggling with ChatGPT (at the time, ChatGPT 3.5), the program actually worked! It could read the text, send it off to the OpenAI servers to be summarized, and respond with an outputted summary.

The next step was to actually get it to do this for a case. The thing I quickly learned is that LLMs have a finite amount of space for doing what’s asked of them. This is known as the context window, and for ChatGPT 3.5, the context window was around 16,385 tokens. Each token is about 4 characters of text, so we’re really talking about 65,540 characters, which is 10,083 words, or roughly 46 pages of text. That seems like a lot until you realize that this context window covers everything from the prompt, text to be analyzed, reasoning/processing, and output.5

Exceeding the context window was an early and persistent problem, and it usually led to one of two results. If I was lucky, I’d get a very short, usually truncated, crappy summary. But usually, I’d just get an error saying that I’d exceeded the token limit. Whoopsie.

“Surely,” I thought to myself, “some for-reals programmer has come up with a solution to this problem.” And someone had. To get around the context window problem, I’d need to have my program break the document up into smaller chunks of content, summarize each chunk individually, store the chunked summary in a file somewhere, and repeat. Once ChatGPT had summarized the last chunk, it would then need to go back and summarize that file where the stored chunk summaries were, and save that to a file.6

Carey’s Programming Tip #2: Assume that your pain is shared pain, and that someone else has probably solved at least part of your problem.

The author had also helpfully put his code up on Google Collab, which is a great environment for learning how programs actually work, even if you’re a non-programmer like me. It’s an interactive environment where you can run and tinker with discrete code slices individually to really see how things work and break them in a gentle and easily observable way.

Iterate, Test, & Repeat, Until the Heat Death of the Universe.

Once I had the chunking problem mostly sorted, I moved my program out of the Collab environment. That was a fun exercise in frustration, because I learned that once I moved code out of the safety of Google Collab, into the lawless wasteland that is my Windows desktop running Ubuntu Linux under the hood, I would have to install many, many things to make the computer do what the Google Collab environment did automatically.

I will not explain everything I had to do, but I will share two Python-specific problems I faced:

There are different versions of Python, and stuff written in the older version of Python usually do not work in the new Python without varying degrees of effort. And it almost never works going backwards, because, fuck you that’s why.

A fresh Python install is fairly bare-bones7, and my scripts required a number of packages to run properly. But it’s Python, so that means I also would need to install all the package-specific dependencies. And sometimes, because of #1, those dependencies can’t really be satisfied because no one has updated the library for like 7 years. Welcome to dependency hell.

Carey’s Programming Tip #3: Be prepared to enter dependency hell at least once during your journey. Learn to process the stages of grief quickly.

Once I escaped dependency hell, ChatGPT and I got back to work. Now, unlike for reals programmers like my husband, I did not really have a software development environment. My ‘development environment’ consisted of a combination of Notepad++ in Windows, and the Ubuntu command line which I used to test/run my code. I remember many times where Husbot would wander into my office, either to bring me a motivational beer or remind me to eat, or to offer assistance when ChatGPT and I had really gotten stuck. He would peer over my shoulder, frown, and silently judge me for my banjanxed setup, and my sheer incompetence when it came to basic software engineering practices.

Take for example, what I called ‘version control’. My approach consisted of saving slightly different versions of whatever I was working on using delightfully unhelpful names like CJEUCaseSummarizer.py, CJEUCaseSummarizerOld2.py, CJEUCaseSummarizerv3_USE_THIS_ONE.py, , etc. Eventually, even I got confused as to which version did what, or which version was working. Oh, and I did this on different laptops, for reasons.8

Similarly, my commenting process was also unforgivable, and it still needs work, if I’m being honest. Adding descriptive comments in code is valuable, both to provide clarity to others on why specific choices were made, but good comments are also helpful when it comes to looking back at your own code, when months or years have passed and you no longer remember why you did that thing. So, for example, instead of ‘add UTF-8 support’ try something like

# Open and read the file. Add UTF-8 encoding to avoid the always annoying Unicode ''charmap' character maps to <undefined> error' when processing cases like Gäñă v. Ḍèëç. Carey’s Programming Tip #4: Your future self will be eternally grateful for having a good version control process and leaving descriptive, useful comments and not shit like #LOL I Fixed the Thing.

Fix Yo' Prompts.

Even after I got my program up and running and it was turning out halfway decent summaries, I still had to contend with another problem: the generated summaries were still pretty bad.

Some of this was a byproduct of the model (ChatGPT 3.5 was notorious for turning out garbage) + my poor programming skills, but I also realized that my early prompts did not provide the LLM with enough structure and detail on what I actually wanted to see. Asking the LLM to ‘summarize this case’, with no additional context or clarification consistently led to very generic, unhelpful answers. I’d forgotten a very important programming tip:

Carey’s Programming Tip #5: Provide detailed prompt instructions. Describe exactly what you want to see, step-by-step, even if it seems obvious. Pretend you’re instructing an interdimensional alien on how to make a peanut butter & jelly sandwich.

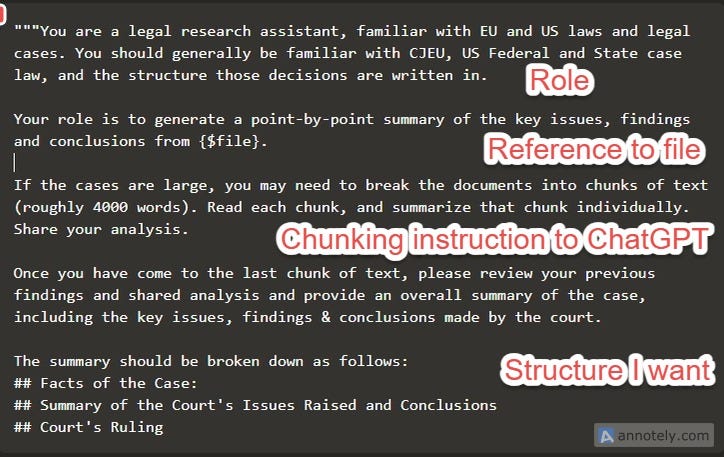

To improve my output, I started by giving ChatGPT a role, and a more defined idea of what I was looking for. As the models improved, I also switched from broad language like ‘court cases and decisions’ to more granular, jurisdiction-specific decisions and analysis, because courts and regulators do not write decisions/opinions/guidance in exactly the same way. I also provided concrete guidance on where to look, how to handle large cases, and what I wanted the LLM to prioritize when summarizing the case. Finally, I was descriptive about how I wanted the output to be presented. My prompts went from “summarize this case” to something more like this:

I discovered that one way to cut down on hallucinated output, is to instruct the LLM to provide citations, and identify where the summarized point, holding, or quoted language exists in the document. CJEU cases make this easy, because each paragraph is numbered. However, this is a bit harder when paragraph numbers don’t exist, and sometimes I needed to think creatively (by telling ChatGPT to cite to headers, page numbers, or sections).

Telling the LLM to provide a citation makes it much easier to read over and check the output, but it has the bonus effect of reminding the LLM to not make shit up. While I haven’t seen this particular suggestion in prompt engineering guidance, the folks over at Anthropic have picked up on this — which is why they recently added a Citations feature to their API.

And that leads me to my final LLM-specific suggestion: Play around with different providers, models, and model families, and be prepared to customize your prompt to draw on model strengths and correct for model weaknesses. I have tweaked my prompts for all the big boy models (Claude 3.5, ChatGPT (various), Gemini 1.5, DeepSeek), and I anticipate that I’ll need to keep doing this, especially if I move to local LLM models.

Break It Up & Keep Iterating

Over the last twoish years, I’ve also begun to discover another crucial programming hack:

Carey’s Programming Tip #6: Don’t boil the ocean with a single program or script. Write code that solves a specific problem, get that code to work on its own, and then work on chaining programs together.

Once I got my case summarizer program sorted, I realized it would be rad if I could also automate the process of grabbing new cases and doing a quick relevance/sanity check before summarizing. Most courts hear loads of different types of cases, and I personally don’t care about 90% of them. So, ChatGPT and I created two different Python scripts that do this — one to check the Court of Justice’s RSS feeds, and another to ping the British and Irish Legal Information Institute’s (BAILI) Irish RSS feeds.9 I run these a few times a week.

Relevant cases get passed to a ‘Raw’ directory, and then my CaseSummarizer script goes to work and outputs the cases to a ‘Summarized’ directory. I then manually move the summarized cases into a folder on Obsidian (my second brain program of choice), and then invoke two very cool community-developed scripts on each new case. The first is a highly customized version of Obsidian-Linter for handling metadata, and the second is a lightweight tagging tool called Obsidian-Virtual-Linker, which helps me find relevant cases and other documents related to specific topics. The end result looks something like this:

After things are bagged and tagged as it were, I do a few light edits. For example, I manually edit the title because the CJEU titles consist of a string of text and numbers, and not the case ID. I also tweak some things that Linter gets wrong, and I do a spot check of the case itself to see if the summary actually matches reality. Sometimes, I have to edit the summary, but as models improve and my prompts get more specific, I’ve noticed that I’ve had to do this less often.

I still have a number of things to improve on before I consider this even remotely done.

I need to improve the workflow so that the case-checking scripts run on their own and only download for-reals new cases. Right now, they download everything that’s on the RSS feed list, which isn’t what I want.

Once I solve that problem, I need to have a way to automatically call and run the summarizer script, and tell me when it’s done.

I need to find a good local model to run for case summarizing. I’m not very impressed with Llama 3.2, but my machine is not beefy enough to play with models that require fancy things like GPUs. I may try my luck with DeepSeek-R1, but I’m still a little iffy on the whole China/ToS thing.

I probably should create some sort of frontend so I’m not stuck using the command line forever.

I might expand this to other jurisdictions & build in some functionality that would allow me to more easily customize the case download process/RSS feeds for other legal interest areas. I keep talking to people who would love to see a tool like this that could do quick and dirty summarization for other areas of law.

I need to figure out a better solution for my Baili conundrum. Once that’s solved, I could potentially turn this into a service for clients.

Yeah, so that’s it. That’s my whole process, and what I need to work on next. I hope folks enjoyed this little jaunt into how my lack of fool-suffering + ChatGPT led me to actually finding a solution for a very persistent problem I’ve had for years.

If you’re a non-programmer type, but have similarly annoying problems you might like to tackle, I hope my process helps. Between LLMs, and all the low/no-code and automation tools that have been developed within the last five years, plus amazing user-friendly development platforms like Google Collab, it’s never been easier for non-engineers to bend computers to their will.

As always, leave a comment if you have tools you recommend, like what I said here today, or think I got things completely wrong. If you’ve got thoughts on how I can improve my specific workflow, hit me up at carey@priva.cat, or slide into my DMs.

I’m convinced that when I die, the handful of people who attend my funeral will spend the whole time cracking jokes about all the myriad things that I bitched about while on this earth. Some people who will read this post probably have an hour’s worth of material already saved up for the occasion. You know who you are.

Special thanks to Simon McGarr for the magic legal words I needed. I still owe you.

Early on in my relationship with Husbot, who is a for-reals engineer at a Fancy Big Tech company, this used to be a bit of a struggle. I had MANY ideas about what I thought could be automated, but lacked the tools or skill to make it happen. Often, I’d ask him for assistance with programming, and inevitably, this would lead to deep philosophical discussions and/or fights about whether I actually needed to automate in the first place, or if I should just suck it up and do the damn thing manually already.

He’s not wrong — a good rule of thumb for automation is, if it takes you less time to do the thing (like manually summarizing 20 documents for a single project, or data cleansing a small Excel file) than it would take to write the code to do the thing, you should probably just put on your big girl pants and do the goddamn thing. But, if the task is something you have to do regularly, or consists of a large volume of stuff $that would take a great deal of time to do, like summarizing 500 cases a month, and it’s a good candidate for automating, then by all means, automate that bad boy.

I blame law school and legal training in general for the inability of most courts and regulators to get to the point. I know that sometimes, you really do need 50 or 75 pages to lay it all out there and explain the factual and legal complexities, but sometimes, I swear to god, it strikes me as less motivated by legal necessity, and more like a diarrhea-of-the-keyboard problem. Or a little sado-masochistic torture against law students and lawyers, as payback for what was done to them as law students.

For those who don’t read cases, I grabbed a few recent, but fairly standard-length cases from the EU Court of Justice, and pasted it into OpenAI’s Tokenizer. It reported that one case was 10,228 tokens long, another 8,094, and a third, 11,340 tokens long.

When I shared this with Husbot, he kind of laughed and explained that when Python came out 30 years ago, it was considered a “batteries included” language, particularly compared to older languages like C, which required you manually install nearly everything.

There are two reasons my version control system sucks. The first is that while lawyers are very good at marking up/redlining documents, version control systems do not really exist. Partly this is because it’s hard to have a shared versioning process that allows people to securely share say, Word documents/PDFs across different organizations.

But the other reason my version control process sucked is that I am pathologically incapable of learning how to use git properly. The git command line syntax and documentation is unintuitive, and at least the Windows GUI simply DOES NOT WORK. Git is so bad that there are even an appropriate XKCD comics about it.

I have since found a GitHub client which I can mostly work with, so I now have better version control process.

While I am mostly unburdened about pinging the Curia database, I recognize that I am in a murky spot concerning cases on Baili. Generally-speaking, there are broad rights to use and reproduce court decisions in many jurisdictions, including the US, Ireland, and from the Court of Justice in the EU. Direct access is fine, as long as I attribute properly, which I do, because everything on the original case stays as-is.

However, because the Irish Court Rules are annoyingly behind the times, Irish court cases are only considered ‘official’ if they are published as non-OCR’d PDFs, and this tends to break LLMs. Baili has some magic that will faithfully convert cases from the devil spawn PDFs into parseable html. Until I can figure out a proper solution, I do not plan to commercialize this tool, and will continue to be sparing in my use of Baili cases.