Political Grok-Pocrisy

Grok is spewing out CSAM on X, and yet politicians are still happily using the platform. The problem is, they're also passing draconian laws.

There has been a big push over the last year or so by politicians around the world, clamoring loudly for legislation to force draconian (and hopelessly ineffective) content moderation rules, age-restricted access to the internet, and wholesale surveillance of encrypted communications. The proposals and laws range from mandates requiring online providers to block the sharing and dissemination of “illegal content”, hate speech, and misinformation, to laws banning and criminalizing non-consensual sexual imagery and deepfakes.

Then there are the more traditional ‘protect the children’ laws imposing age verification (where everyone, kids and adults alike, must verify their age via selfies and government IDs), parental consent requirements, and, increasingly, a growing interest in banning minors (and anyone an automated system perceives to be a minor) from parts of the internet entirely.

Finally, there’s the truly ominous stuff: client-side scanning of all encrypted communications. Many people, politicians and media alike, uncritically assert that these laws will only help protect children and vulnerable people from harm, and that such surveillance will only be used in a targeted fashion, as a “last resort” against the bad guys.[1]

The sheer avalanche of laws and proposals in the last year is, frankly, staggering. Here are just a few:

Australia passed the world’s first social media ban, which introduces a mandatory minimum age of 16 for accounts on certain social media platforms like X, TikTok, Snapchat, Instagram, and YouTube. It came into effect on 10 December 2025, and there is no parental override.

In the EU, lawmakers have been eager to push forward bills and proposals that would do everything from monitoring end-to-end encrypted chats, to banning under-16s from the internet entirely. And then, of course, there’s the Digital Services Act, a sweeping law that tries to be an everything-bagel for every bad thing on the web.

Most controversially, all but four member states (Czech Republic, Italy, Netherlands, and Poland) in the Council of the EU endorsed a Danish compromise version of Chat Control. Unlike previous iterations, the new version would no longer force chat and other service providers to indiscriminately scan and report suspicious conversations (including end-to-end encrypted chats) on a user’s device. However, the agreed-to language strongly encourages service providers to do so voluntarily. Patrick Breyer, a former MEP for Germany, has opposed this from the beginning, and has a great analysis of the harms from Chat Control here.

In November 2025, MEPs overwhelmingly approved a non-legislative report calling for minimum-age requirements for social media (akin to Australia’s over-16s law), ‘age-appropriate content’, and age-verification obligations (source: EU Parliament News).

And finally, there’s the Digital Services Act, a broad law that entered into force in 2022. It strengthens transparency and user-rights obligations for intermediary service providers, but also attempts to regulate everything from deepfakes and “illegal content” to hate speech and misinformation. Ironically, the EU issued its first DSA fine, of €120M, against X in December 2025 for violating various aspects of the law.

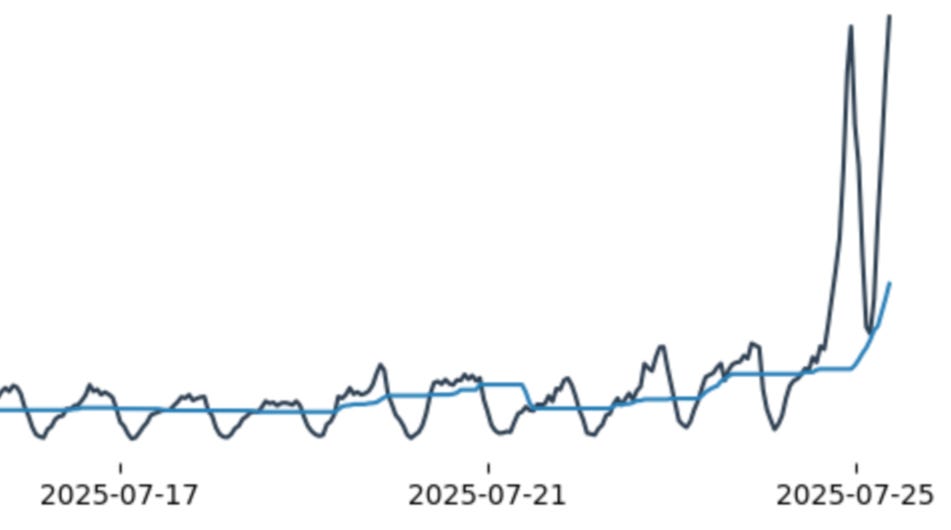

In the UK, of course, there’s the Online Safety Act of 2023 and the Age-Appropriate Design Code 2021, both of which are designed to make the internet safer for children, primarily by imposing risk assessments and age-assurance obligations. Companies within the scope of the act, including porn sites, social media platforms, and search engines, must keep kids out and away from porn, as well as material that promotes unhealthy eating, suicide, or self-harm. Unsurprisingly, ProtonVPN reported a 1,400% traffic spike from UK IP addresses as the law’s age-check requirements took effect. Also, implementation of the law has been a disaster.

In the US, lawmakers in over a dozen states (including California, Florida, Georgia, Texas, Mississippi, Ohio, Utah, and Tennessee) have passed laws attempting to restrict minors’ access to social media, either through outright bans or through parental-permission requirements enforced by age verification and age-gating (source: EFF).

Congress also passed the TAKE IT DOWN Act in 2025 to combat non-consensual intimate imagery (NCII). The law requires websites to remove NCII within 48 hours of it being reported. While this is great in theory, the law is so broadly written that many civil liberties groups worry it will be abused by bad-faith actors to remove lawful content they don’t like, as already happens with the Digital Millennium Copyright Act (DMCA). Except, unlike the DMCA, there’s no put-back clause, nor any protection against bad-faith removal requests.

Now, I’m going to state the obvious up front: most of these laws start out with noble intentions. The internet is ugly, people are terrible, and social media abuse, deepfakes, and misinformation are rampant. Politicians want to fix the problems. But my quibble is with how they’re doing it.

So, why am I talking about all of this here today, you might be wondering?

Two words: Grok and hypocrisy. Or maybe just Grok-pocrisy.

xAI, Non-consensual Images, CSAM & Hypocrisy

Over the last week or so, people discovered (again) that X, and in particular X’s chatbot Grok, has become a veritable garbage factory, spewing out hundreds, if not thousands, of images of actresses, regular users, and even children as young as two in sexualized poses, in varying states of undress, and being assaulted or abused. And of course, because it’s X, none of this is consensual, and most of it targets women and girls. This has all been going on since around December 28, according to press reports.

While Grok’s ability to generate awful content isn’t new (or, at this point, surprising), the frequency of sexualized images, child sexual abuse material (CSAM), and NCII was alarming, with one site (Copyleaks) reporting that thousands of images were being generated by Grok at an estimated rate of one image per minute(!).[2]

According to Copyleaks and various news outlets, the chatbot fulfilled prompts asking it to alter profile photos and posted pictures of women and children, for example by putting them in bikinis, sexualizing physical features, removing clothing (nudifying), and showing women in states of distress or abuse, or in the trunks of cars.

While Grok (the AI) eventually “apologized” for its sins, Elon Musk, in typical techbro-libertarian fashion, thinks it’s all very funny, actually. xAI was largely mum on the subject, though it promised to at least take the obvious CSAM down. The Verge reported that Elon even joined in on the “joke” by putting actor Ben Affleck in a bikini.

For you see, boys and girls, X is a free-speech zone, and that means everybody just needs to be cool with Grok generating nonconsensual graphic images of people, in addition to all the misogyny, racism, and white nationalism that the site is already known and loved for. I guess this is what conservatives call #winning and #owningthelibs. Me? I call it a garbage heap full of terrible people.

Let’s Talk About Hypocrisy

Reading about this made me angry. Here is a situation that, in normal times, would have been shut down immediately on old Twitter. People would be fired. Hearings would be held, and CEOs would be lambasted. At the very least, there’d be way more media attention on all of this.

After the anger (sorta) subsided, though, I got to thinking: for all the rhetoric and constant cries for new, stronger surveillance laws, ostensibly to protect the most vulnerable and rein in oligopolist Big Tech, how many politicians continue to hang out at the Nazi Bar that is X? And how many keep posting, no doubt fully aware of what’s going on around them?

CSAM should be a five-alarm fire for governments. Elon shouldn’t get a pass, because what’s happening on X is criminal, not just offensive, harassing, or annoying.[3] IMHO, X itself should be shut down, at least until Grok is trained to be less willing to honor the whims and fetishes of the worst humanity has to offer.

So far, only a few governments have bothered to say anything at all:

India’s IT minister demanded answers on Friday

French authorities promised an investigation that same day

Malaysia’s Communications and Multimedia Commission has “taken note” of the matter with “serious concern”, but acknowledged X wasn’t a regulated entity

The UK’s Tech Secretary Liz Kendall called for X to ‘urgently deal’ with Grok being used to create NCII of women and girls, while Ofcom launched an investigation

One of Ireland’s MEPs, Regina Doherty (a member of the Internal Market and Consumer Protection Committee, and a daily X user), posted on X a letter she wrote to EU Commissioner Henna Virkkunen (Executive Vice-President for Tech Sovereignty, Security and Democracy, and also a daily X user) urging the Commission to investigate.

Recall that the European Commission is the body responsible for enforcing the DSA against very large online platforms like X.

At a minimum, anyone with any moral decency (and in particular, elected leaders) shouldn’t be supporting a service that is actively harming their constituents. X should be treated like a nuclear waste disposal site—not as a thing of honor, but as something to avoid.

And yet… for all the political grandstanding about protecting people, especially children, the number of EU politicians who maintain active X accounts is staggeringly high.

But how high? I’m glad you asked.

Key Findings

I started with the MEPs, particularly those who were in the committees responsible for drafting the DSA, the Directive on Combatting Violence against Women, and an earlier version of Chat Control, which proposed making client-side scanning of encrypted chats mandatory. I also reviewed who signed on to the recent age-verification proposal I mentioned above.

To speed things up, I first pulled the EU Parliament committee member lists for the three primary committees responsible for the initial proposals: Security & Defence; Civil Liberties, Justice & Home Affairs; and Internal Market & Consumer Protection.[4] Many MEPs list their Twitter handles on their profile pages, so it’s relatively easy to get a computer to scrape that data, starting from the individual committee pages. I had Claude write a simple script to do so. That gave me a rough indication of how many of the MEPs had (public) Twitter/X accounts. Across the committees I was interested in, the answer was 190 out of 390 (roughly 49%).
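The script itself isn’t shown, so here is a minimal sketch of just the extraction step, assuming each MEP profile page links to the handle as an ordinary `<a href>` pointing at twitter.com or x.com. That page structure is an assumption, not a description of the real europarl.europa.eu markup, and downloading the pages is left out. The names `HANDLE_RE`, `NOT_HANDLES`, and `extract_x_handles` are hypothetical; only Python’s standard library (`re`, `html.parser`) is used.

```python
import re
from html.parser import HTMLParser

# Assumption: handles appear as links like https://twitter.com/handle or https://x.com/handle.
# X handles are 1-15 letters, digits, or underscores.
HANDLE_RE = re.compile(
    r"^https?://(?:www\.)?(?:twitter|x)\.com/@?([A-Za-z0-9_]{1,15})/?(?:\?.*)?$"
)
# Path segments that look like handles but belong to share widgets, not accounts.
NOT_HANDLES = {"share", "intent", "home", "search"}


class XHandleExtractor(HTMLParser):
    """Collect unique X/Twitter handles from <a href="..."> links, in page order."""

    def __init__(self) -> None:
        super().__init__()
        self.handles: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        # attrs is a list of (name, value) pairs; value can be None.
        href = (dict(attrs).get("href") or "").strip()
        match = HANDLE_RE.match(href)
        if not match:
            return
        handle = match.group(1)
        if handle.lower() not in NOT_HANDLES and handle not in self.handles:
            self.handles.append(handle)


def extract_x_handles(html: str) -> list[str]:
    """Return the X handles linked from one (already downloaded) profile page."""
    parser = XHandleExtractor()
    parser.feed(html)
    parser.close()
    return parser.handles


if __name__ == "__main__":
    sample = (
        '<a href="https://twitter.com/janedoe">Twitter</a>'
        '<a href="https://twitter.com/share?url=example">Share</a>'
        '<a href="https://x.com/janedoe/">X</a>'
    )
    print(extract_x_handles(sample))  # ['janedoe']
```

Because this sketch reads handles only from links, a profile that mentions a handle in plain text without linking it would be missed.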

I then wanted to see how they voted. To do this, I grabbed the voting data from howtheyvote.eu on the four bills and proposals I mentioned above, which includes vote breakdowns for all MEPs.[5]

I had Claude extract this data and map it against MEPs with active X accounts, and dump the details into a csv file, which I then reviewed. The matching process looked something like this:

1. Load all MEPs with X accounts from the csv

2. Fetch voting data for each bill via the respective howtheyvote.eu URLs

3. Match MEP names between datasets

4. Flag all MEPs who voted FOR while having X accounts

5. Generate report with vote breakdown

6. Sanity-check a sample of the outputs to make sure Claude wasn’t hallucinating.
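The matching steps above can be sketched with Python’s standard library roughly as follows. This is not the script Claude wrote: it assumes the CSV has hypothetical `name` and `x_handle` columns, and that each bill’s votes have already been fetched from howtheyvote.eu (step 2, omitted here) into dicts with hypothetical `name`, `group`, and `position` keys; the site’s real data format may differ. Step 3 is the fiddly part, since the two sources can disagree on accents, capitalization, and surname-first ordering, so the hypothetical `normalize_name` strips accents, casefolds, and sorts the name tokens.

```python
import csv
import io
import unicodedata
from collections import Counter


def normalize_name(name: str) -> str:
    """Strip accents, casefold, treat hyphens as spaces, and sort tokens,
    so "KOVÁCS János" and "János Kovács" produce the same key."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    tokens = no_accents.casefold().replace("-", " ").split()
    return " ".join(sorted(tokens))


def load_x_accounts(csv_text: str) -> dict[str, str]:
    """Step 1: map normalized MEP name -> X handle, skipping rows with no handle."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return {
        normalize_name(row["name"]): row["x_handle"].strip()
        for row in rows
        if row["x_handle"].strip()
    }


def match_votes(x_accounts: dict[str, str], votes_by_bill: dict[str, list[dict]]):
    """Steps 3-4: for MEPs with an X account, record which bills they voted FOR
    and their political group."""
    yes_bills: dict[str, set[str]] = {}
    group_of: dict[str, str] = {}
    for bill, votes in votes_by_bill.items():
        for vote in votes:
            key = normalize_name(vote["name"])
            if key in x_accounts and vote["position"] == "FOR":
                yes_bills.setdefault(key, set()).add(bill)
                group_of[key] = vote["group"]
    return yes_bills, group_of


def breakdown(yes_bills, group_of, n_bills: int):
    """Step 5: how many X-account MEPs voted FOR at least one bill, and a
    per-group count of those who voted FOR every bill."""
    all_yes = [key for key, bills in yes_bills.items() if len(bills) == n_bills]
    return len(yes_bills), Counter(group_of[key] for key in all_yes)


if __name__ == "__main__":
    # Made-up toy data for illustration only; not the real MEP or vote data.
    csv_text = "name,x_handle\nJane Doe,@janedoe\nJános Kovács,@jkovacs\nAlex Roe,\n"
    votes = {
        "bill_a": [
            {"name": "DOE Jane", "group": "EPP", "position": "FOR"},
            {"name": "KOVACS Janos", "group": "Greens/EFA", "position": "FOR"},
            {"name": "ROE Alex", "group": "Renew", "position": "FOR"},
        ],
        "bill_b": [
            {"name": "DOE Jane", "group": "EPP", "position": "FOR"},
            {"name": "KOVACS Janos", "group": "Greens/EFA", "position": "AGAINST"},
        ],
    }
    accounts = load_x_accounts(csv_text)
    yes_bills, group_of = match_votes(accounts, votes)
    total, per_group = breakdown(yes_bills, group_of, n_bills=len(votes))
    print(total, dict(per_group))  # 2 {'EPP': 1}
```

Sorting name tokens is a deliberately loose heuristic: it can merge two different people who share the same set of names, and it won’t catch nicknames or dropped middle names, which is exactly why the manual sanity check in step 6 matters.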

Here’s a link to the data for anyone who’s interested.

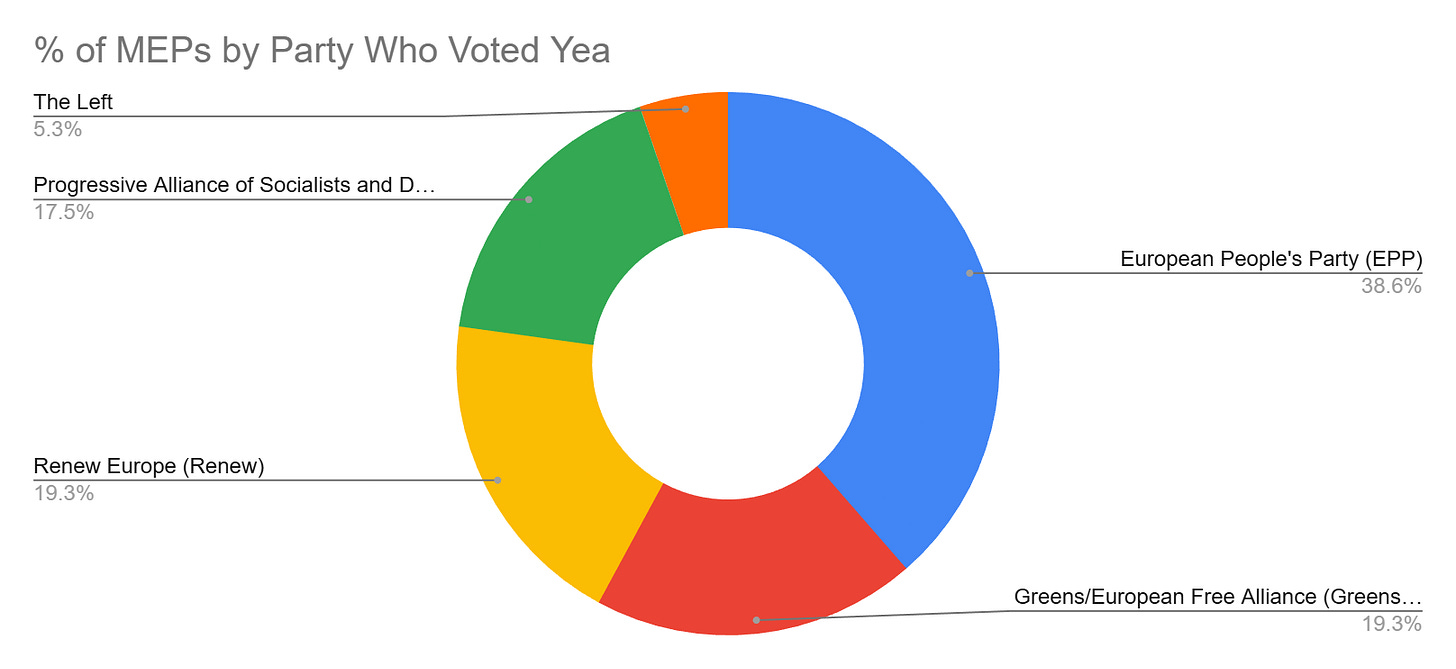

First off, there are a lot of MEPs (159) who supported at least one of the bills or proposals I discussed above and still maintain active X accounts. Fifty-seven (57) of them voted yea on all four. The largest blocs were centre-right and centrist (EPP with 22 votes and Renew with 11). The centre-left and left wing were also well represented, with 11 Greens and 10 members of the Progressive Alliance of Socialists and Democrats (S&D) voting yea on all four. Rounding things out was the Left (far left), with 3 votes.

For the European Council, there are 29 members: the 27 heads of state or government of the member states, plus Ursula von der Leyen (President of the European Commission) and António Costa (President of the European Council), who are non-voting members. All but three members (the PMs of Slovakia, Bulgaria, and Slovenia) maintain active X accounts. Most post daily. For what it’s worth, all 27 member states voted for the DSA (though in most cases under the predecessors of the current members).[6] As I mentioned above, all member states bar four (Czech Republic, Italy, Netherlands, and Poland) backed the Danish compromise version of Chat Control. All member states except Denmark (which did not participate) voted in favor of the directive on combating violence against women.

Here’s My Call to Action

I’m fairly certain few will read this, and that it won’t affect any politician’s behavior. But it should. It’s the height of hypocrisy and moral bankruptcy to patronize a business or establishment whilst passing laws that claim to be about stopping the very thing that business stands for. By remaining on X, politicians signal that they are fine with passing laws that regulate others’ behavior, even if they have no self-control of their own. Rules for thee, not for me. Or Elon.

They are no different from anti-porn crusaders who have pornhub.com bookmarked and are on a first-name basis with the staff at the local strip club. Or leaders who push to revoke the citizenship of the foreign-born while exempting their own friends and family.

As the old Sandia Labs nuclear waste warning goes:

This place is not a place of honor.

No highly esteemed deed is commemorated here.

Nothing valued is here.

This place is a message and part of a system of messages.

Pay attention to it!

Sending this message was important to us.

We considered ourselves to be a powerful culture.

X is not a place of honor. It’s a toxic-waste zone of depravity, bots, CSAM, NCII, and trolls. There is nothing of value on X. The politicians who inhabit X should lead by example and leave X/Twitter, OR at least explain why X gets a pass while they vote for strict measures elsewhere that will affect millions of people.

The public deserves accountability and consistency from their elected representatives. If you’re interested, you can find and contact your MEP by going here: https://www.europarl.europa.eu/meps/en/home. My MEPs will be getting a call.

[1] This, of course, is nonsense. The moment a backdoor into end-to-end encryption is mandated is the moment every government, democratically elected or not, starts compelling providers to monitor for its own pet causes.

[2] Copyleaks has a great writeup of this, and I highly encourage you to read it for more of the technical details: https://copyleaks.com/blog/grok-and-nonconsensual-image-manipulation

[3] Many countries have laws prohibiting the distribution of CSAM and NCII. For example, in the US, 18 U.S.C. § 2252A arguably covers what happened on X, at least in relation to children. The EU’s Directive 2024/1385 on combating violence against women seems like it would similarly apply.

[4] You might be wondering why I didn’t include every MEP. The EU Parliament consists of 720 members across 22 committees. I wanted to focus on a small number of members first, across the three committees that are primarily responsible for drafting and advancing these proposals. Mostly, I wanted to test a theory, write a bit of code, and not spend three days in data analysis hell.

[5] Data sources for voting records (please feel free to check my work):

Digital Services Act: https://howtheyvote.eu/votes/146649

Earlier CSAM Bill (Proposed in July): https://howtheyvote.eu/votes/177025

Combatting Violence Against Women and Domestic Violence: https://howtheyvote.eu/votes/168573

Protection of Minors Online: https://howtheyvote.eu/votes/181520

[6] NB: The votes for the DSA occurred in April 2022, IIRC. So, technically, only around 11 of the current voting Council members voted for the DSA.

Really important piece, Carey. It is funny how politicians will go on about breaking e2ee for the sake of child safety and yet seem to find difficulty in tackling the platforms enabling CSAM to be distributed 'out in the open'.

A few folks seem to be similarly outraged. Here's a great report by Dr. Paul Bouchaud of AI Forensics (shared by Raziye Buse Çetin - https://www.linkedin.com/pulse/when-platforms-dont-just-host-harm-generate-grok-on-x-%C3%A7etin-rkhce/?trackingId=d0IvDuz8RGGQTRV7K%2B4Idw%3D%3D) showing that this is a far more widespread problem than I realized: https://www.linkedin.com/posts/aiforensics_grok-ai-images-activity-7414252008783646720-j7AO/

By analyzing over 20k images generated by Grok and 50k requests made by users we found that:

- 53% of images generated by @Grok contained individuals in minimal attire, with 81% presenting as women

- 2% depicted persons appearing to be 18 years old or younger, as classified by Google's Gemini

- 6% depicted public figures, around one-third political

- Nazi and ISIS propaganda material was generated by @Grok