Privacy Disasters: Microsoft Keeps Stepping in It

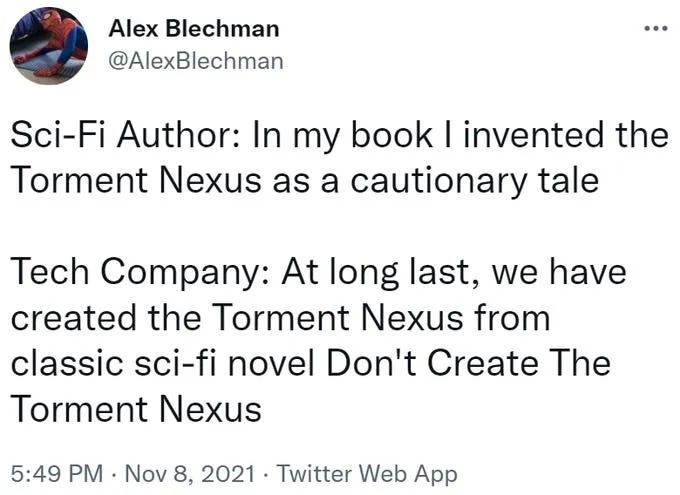

Microsoft releases the Torment Nexus v. 2.

As the old chestnut goes, “Insanity: doing the same thing over and over again and expecting different results.”1

The repeat offender in this case is Microsoft. And the repeat offense? Their privacy-invading Recall application, currently rolling out on select computers with Windows 11 installed. Or as folks in my line of work like to say: a bit of technosolutionism in search of a problem that nobody has.

But first, a kitten:

Some of you may “recall” that I’ve talked about Microsoft’s early demo of its always-on by default, dystopian life-logging application. At the time I cynically referred to it as the Torment Nexus v.1.

Recall’s announcement freaked many people out, especially after security researchers discovered that Recall was constantly capturing and OCRing screenshots, and storing everything in plaintext in a local database that was easily accessible and instantly retrievable. Oh, and Microsoft Copilot was involved, as a bonus fail.

This news, unsurprisingly, also attracted the attention of regulators in Europe, including the Information Commissioner’s Office in the UK and the Data Protection Commission here in Ireland, though nothing much came of it, beyond a few sternly written press releases.

I had … many thoughts.

As designed at the time, Recall had many problems. Among them was the fact that nobody at Microsoft seemed to have any idea about the core principles of data protection, including:

Transparency & Fairness: At first, Microsoft planned to have Recall on by default for all Copilot+ laptops, with a single popup during the initial install of Windows 11. That meant that the only person who had any awareness of Recall’s existence was the person who upgraded to Windows 11. Microsoft wasn’t being transparent and clear to all potential users, which meant that users couldn’t make an informed choice. In addition to being a legal problem, it also created profound impacts on vulnerable groups, like abuse victims, children, and even employees who may not have been aware that Recall was monitoring their every digital step.

Data Minimization: Because Recall was designed to record everything, there were virtually no limits on data collection. Microsoft did build mechanisms to delete individual screenshots or pause Recall temporarily, and to selectively block recording for certain applications. However, based on early testing by security researchers, setting limits on Microsoft’s collection of data proved to be challenging and tedious. Disabling or blocking access was buried in the bowels of user settings, and users had to delete each screenshot individually. Also, re-enabling Recall was only an inadvertent button-smash away. Clearly, Microsoft was collecting more information than they needed.

Storage Limitation: Recall’s storage capability was also virtually limitless — bounded only by the size of the user’s hard disk, unless they did the tedious work of manually deleting. This was disproportionate, and users had no way to set their own retention period. How many people really need to recall that one website they visited 3 years ago?

At the time, I noted that it seemed very obvious that nobody at Microsoft HQ had bothered to even consult with their privacy team, much less do even a rudimentary privacy impact or data protection impact assessment,2 so I decided out of the goodness of my heart to do one for them.

Right. So that was May. Microsoft quickly recalled the Recall feature in early June, and promised to do better. To their credit, they did make some changes. For example, Recall (aka Torment Nexus v2) is no longer on by default, though it’s still possible for it to be on without users being aware (for example, if IT turns it on by default at your workplace). Encryption and overall security were also substantially improved, and Microsoft made it easier for users to set their own retention limits and delete ranges of data, instead of individual screenshots. They also claimed that:

Sensitive content filtering is on by default and helps reduce passwords, national ID numbers and credit card numbers from being stored in Recall. Recall leverages the libraries that power Microsoft’s Purview information protection product, which is deployed in enterprises globally.

Except that … no it’s not.

Microsoft: Still Clueless About That Whole Personal Data Thing

Predictably, not long after Recall Pt 2: Always Watching U was announced, security researchers started noticing that this last claim wasn’t quite right. Or at least, that the filtering wasn’t consistently in place. The fine folks at Tom’s Hardware observed that despite the claim that sensitive content filtering is on by default and operational, it really wasn’t.

When I entered a credit card number and a random username / password into a Windows Notepad window, Recall captured it, despite the fact that I had text such as “Capital One Visa” right next to the numbers. Similarly, when I filled out a loan application PDF in Microsoft Edge, entering a social security number, name and DOB, Recall captured that. Note that all info in these screenshots is made up, but I also tested with an actual credit card number of mine and the results were the same.

I also created my own HTML page with a web form that said, explicitly, “enter your credit card number below.” The form had fields for Credit card type, number, CVC and expiration date. I thought this might trigger Recall to block it, but the software captured an image of my form filled out, complete with the credit card data.

And if Microsoft is simply filtering by regular expressions and only for websites, it’s going to miss loads of personal and sensitive data that people likely don’t want recorded or stored for posterity. Or future law enforcement review.

Have a kink? Recall will helpfully take screenshots of your porn habits and make it searchable, no matter how NSFW it is. That will make for some interesting dinner conversations or HR pull-asides.

Having a miscarriage or needing an abortion? Texas will be overjoyed to know about your search history and the friends you chatted with on Signal, WhatsApp, or another secure service.

Are you an activist? The authorities now have your entire digital record at their disposal. Who needs secure storage or encryption when it’s just there for the taking?3

Slacking off at work? Your employer now has ready access to every time-waster, Google chat, Facebook like, and aforementioned porn search. Now they’ll have all the evidence necessary to shitcan you, with screenshots.

Need to flee an abusive relationship or home environment? Microsoft will helpfully let your abuser know, no matter how hard you try to hide your tracks.
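
To make the regular-expression worry concrete, here is a minimal Python sketch. It is emphatically not Microsoft’s filter; how Recall actually filters is only speculated about above. The two patterns and the `find_sensitive` helper are made up for illustration, and assume a filter that only looks for well-formatted card numbers and US Social Security numbers.

```python
import re
from typing import List

# Hypothetical patterns for illustration only; NOT Microsoft's actual rules.
PATTERNS = {
    # 16 digits in groups of four, optionally separated by spaces or hyphens.
    "credit_card": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
    # US Social Security number written as 123-45-6789.
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_sensitive(text: str) -> List[str]:
    """Return the names of the hypothetical patterns that match the text."""
    return [name for name, pattern in PATTERNS.items() if pattern.search(text)]


if __name__ == "__main__":
    samples = [
        "Capital One Visa 4111 1111 1111 1111",
        "SSN: 123-45-6789",
        "Is there a clinic across the state line that is open this weekend?",
        "Leaving Friday while he is at work. Do not text this number.",
    ]
    for sample in samples:
        # Show which (if any) patterns fire for each piece of on-screen text.
        print(find_sensitive(sample) or "nothing flagged", "|", sample)
```

Under these assumptions, the neatly formatted card number and SSN get flagged, while the last two samples sail straight through, even though they are exactly the kind of thing the scenarios above are about. Pattern matching can only catch data that looks like an identifier; it has no idea what is sensitive in context.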

Why it Matters

Here’s the deal. These failures indicate that Microsoft either doesn’t understand or doesn’t care about the core concepts that underpin data protection and privacy rights. And that’s one of the problems I’m trying to solve.

Personal data isn’t just an SSN or credit card number. It’s broader than just what you search for online. Your personal data (or PII) defines who you are as an individual. It helps others to identify you and distinguish you from everyone else in the crowd.

With Recall, Microsoft is now creating a digital record of everything that makes you you, centralizing it, and displaying it like a visual diary for anyone who can get access to your Windows Hello PIN. It’s an always-on surveillance system that you may not even know is running. Sure, it’s just on your computer for now, and it’s probably storing things locally, but much like CCTV and facial recognition, the scope will broaden the more desensitized we become. And I suspect that Microsoft will move the goalposts eventually and start harvesting all that juicy personal data for training AI models, helping you boost your ‘productivity’, or ‘improving’ your Windows experience.

So many engineers are falling over themselves to develop tools in search of a problem to solve. It’s why I will probably end up writing about Privacy Disasters until I’m dead, the planet overheats, or we’ve obliterated what limited privacy, digital autonomy, and rights we have left.

These engineers might mean well, but they’re completely oblivious to the problems these tools create. It’s not just about legal compliance (which this still totally is not). It’s about user trust, our right to be let alone, to have some parts of ourselves hidden from view.

That’s a problem, and it’s going to become even more obvious (even for the Silicon Valley libertarian set) as Western democracies continue to crumble, replaced by totalitarian and authoritarian regimes.

I don’t have connections in Washington or Brussels. I can’t influence policymakers or regulators. I definitely can’t seem to convince Big Tech to think about their life choices. But I might be able to convince one or two of my readers who do have those connections to pass these words along.

To take a beat. And think about why we’re ignoring yet another problem that’s staring us all in the face. And how we might solve it together.

1. I am not a notable quotes gal, but apparently, this draws people in, and for the sake of experimentation, I’m going to try this for a while, until I get sick of it, or the newsletter gets too clichéd and y’all tell me to stop.

2. While I’m speculating that the privacy team wasn’t involved, it’s very clear that there weren’t enough stakeholders in the room. The Verge reported that Recall was developed in secret at Microsoft, and did not implement a set of org-wide security objectives known as the “Secure Future Initiative.” Recall wasn’t even dogfooded with Windows Insiders before the announcement was made.

3. Someone will probably point out that Microsoft encrypts the images and text, but accessing that information is trivially simple provided you know the user’s Windows Hello PIN, or have access to their biometrics.

As