In Defense of Smart Weirdos: What I Learned at an AI Safety Law-a-Thon

I forgot just how much I miss that kind of crazy nerd energy.

This past weekend, I had the privilege of attending the AI-Safety Law-a-Thon in London. The event, which was organized and expertly run by Katalina Hernández (of Stress-Testing Reality Limited) and Kabir Kumar (of ai-plans), was part hackathon, part brainstorming session, part role-playing game, and part law school practicum. I didn’t know exactly what to expect, and I mostly went because Katalina is a friend and she asked me to. Still, I’m glad I did.

The two-day event asked participants to game out the legal and technical implications of a set of hypothetical AI bad-time scenarios. We were asked to argue how responsibility and liability should be assigned to each of the players, and to identify risks and gaps in the AI models and systems themselves. Each group mixed lawyers, who brought knowledge of the law, how to read contractual terms, and how to parse regulatory frameworks like the EU AI Act and the Product Liability Directive, with AI Safety technical experts, who brought expertise in AI risk assessments and in identifying rare-event failures, and who, importantly, grounded all the lawyers in the limits of ‘solving’ wicked technical problems.

I’ll preface this by saying that I know very little about ‘AI Safety’ or its sibling ‘AI Alignment’ as these terms are commonly defined, particularly by those in the rationalist/effective altruism (EA) community, who make up a large percentage of the people in this space. Here’s the Wikipedia definition of AI Safety for those who have absolutely no idea what I’m on about:

AI safety is an interdisciplinary field focused on preventing accidents, misuse, or other harmful consequences arising from artificial intelligence (AI) systems. It encompasses AI alignment (which aims to ensure AI systems behave as intended), monitoring AI systems for risks, and enhancing their robustness. The field is particularly concerned with existential risks posed by advanced AI models

And here’s the definition of AI Alignment:

In the field of artificial intelligence (AI), alignment aims to steer AI systems toward a person’s or group’s intended goals, preferences, or ethical principles. An AI system is considered aligned if it advances the intended objectives. A misaligned AI system pursues unintended objectives.

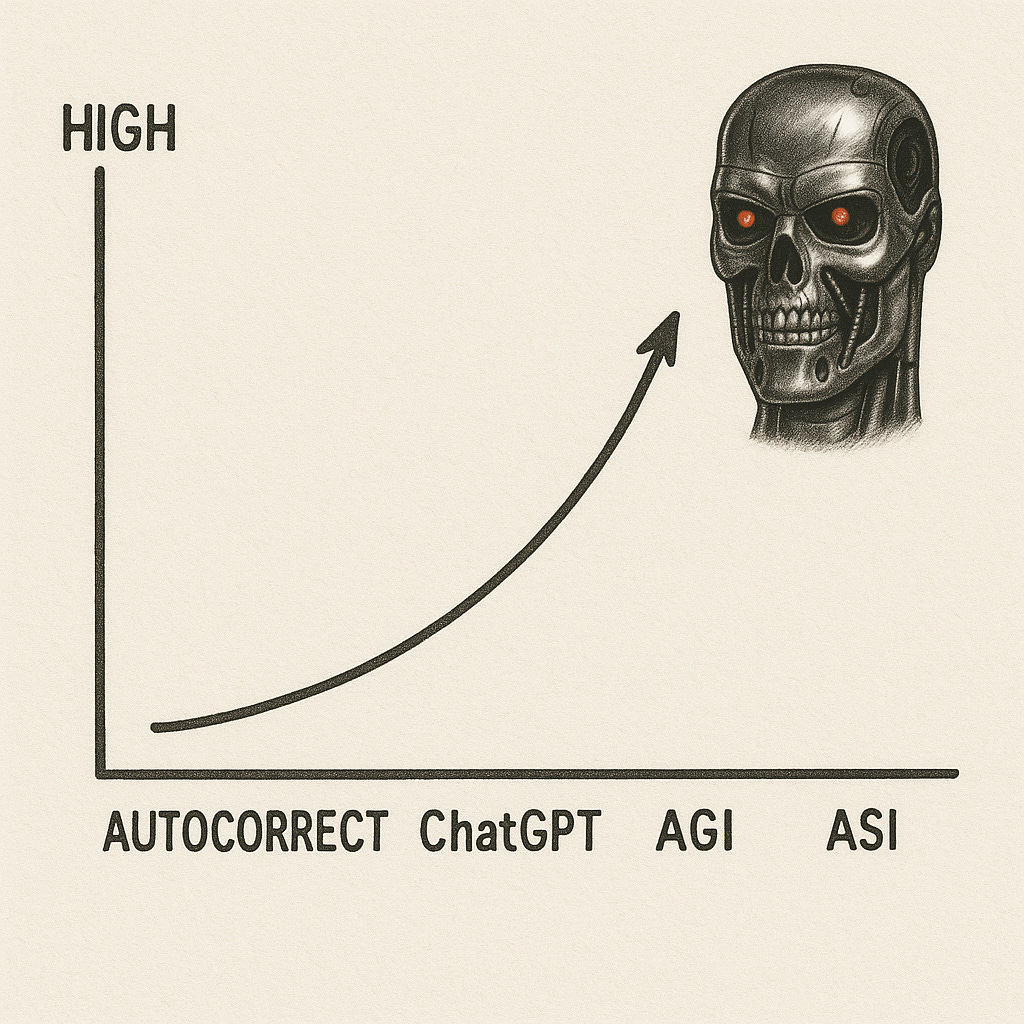

At the simplest level, both fields are focused on keeping complex AI systems, especially artificial general intelligence (AGI) and artificial superintelligence (ASI) systems, from turning us all into paperclips or otherwise killing off humanity.

Even though AGI/ASI do not exist yet, many AI Safety/Alignment principles can be applied to ‘narrow’ or ‘weak’ AI systems and uses like chatbots, medical AI diagnostic systems, biometric surveillance systems, and machine learning algorithms used for automated decision-making. For example, thinking about risks and biases, detecting malicious uses, monitoring, and ensuring transparency apply just as much to questions like whether it’s a good idea to use ChatGPT to decide if a particular person should be hired for a job or given an apartment as they do to preventing the rise of the Terminator.

Thankfully, for the lawyers’ sanity, the scenarios we discussed at the law-a-thon were all quite realistic and practical, well within the limits of the types of narrow AI risks many readers of this blog might already be dealing with today.[1] There were quite a few questions around liability, causality, and burden-shifting, and a lot of contract reading. Most of the scenarios asked us to play the lawyer/expert team for, say, a downstream SaaS provider or customer trying to shift the burden to a general-purpose AI model provider like OpenAI.

I felt a bit sad for the technical folks, in that they weren’t given access to, say, training data, model weights, or detailed product evaluations (they did have studies, some higher-level evaluation documentation, and model cards), which meant that they weren’t really able to engage at a technical level as much as they clearly wanted to. It was all very document-heavy, but in reality, this is probably also how many AI Safety researchers have to get by. Good luck getting the likes of OpenAI, Anthropic, or Google to, say, share their model weights, training corpus, or technical implementation details.

I also found Day 2 to be much more energizing than Day 1. On Day 1, we were put into small groups (usually 2-4 people) and asked to puzzle over one of five different scenarios, delivering a final report by EOD. This was fine, but I think it missed a lot of opportunity for broader conversation and learning. I was so consumed with formatting, coherence, and having sufficient citations for the final project that I almost completely ignored chatting with others in the room. Even though my team was great, it still felt like a school group project, and I hate group projects.

But on Day 2, Katalina & Kabir flipped the script: instead of producing a bunch of output-driven final reports, we were broken up into two large-ish groups and asked to basically brainstorm. And fam, let me tell you, I loved the hell out of Day 2.

Why Weirdo Law-a-Thons/Hackathons Rock

Day 2 was energizing. It was exciting. It was full of electricity, exploration and a dozen other e-based superlatives. In short, it was exactly what I needed from a hackathon.

Having a bigger team meant more ideas, more reference frames, and more dialogue. Having no fixed output requirements meant more time to just throw out crazy ideas and draw messy diagrams on whiteboards. And most importantly, it meant we all learned way more about others’ respective domains and how to approach AI safety problems than we would have otherwise.

I miss these types of events. It has been an age since I was in a room with a lot of differently smart weirdos. And everyone in that room was a smart weirdo in the best way imaginable. Everybody had a story and a unique perspective, even those who didn’t say very much. Each person had a different way of describing the elephant, and so many of those ways were fascinating to me.

Importantly, everyone in the room was genuinely curious, and I really miss that vibe. For all of Dublin’s charms, I miss two things about California and the Bay Area specifically: 1) the weather; and 2) the sheer agglomeration of people who ask the kinds of crazy bananas questions that get you thinking about a problem completely differently.[2] The kind of questions where your response is initially WTAF, followed by … hmmm… that’s interesting, but why?

Now, I have many brilliant, inspiring friends, but they’re spread out all over the world. Likewise, most conferences or local events that I attend are dominated by people who are, to be blunt, high on credentials, influence, or networking skills and low on substance. It’s been nearly a decade since I’ve been at a big group event where so many folks just nerded out on like 15 different-but-tangentially-related themes at the same time. But this is exactly the energy I thrive in. If you have no idea what I’m talking about, think back to your university dorm-room days, but replace the stoner navel-gazing naïf philosophy majors with brilliant-but-cynical problem solvers who have actually been in the shit and know exactly why, where, and how the system or policy fails.

Part of this comes from just hanging out with people who focus on different things and being able to find commonality, but part of it, if I’m being totally honest, comes down to the fact that a good percentage of the attendees at the Law-a-Thon were rationalist/EAs and many of them are probably a bit on the spectrum.

I am neither, but I tend to enjoy being around those types of people from time to time, primarily because the rationalist/EA crowd, to paraphrase something Husbot told me once, are generally used to people thinking they’re a bit out there/crazy/fringe, and have mostly come to terms with that. Still, they feel so strongly and passionately about the importance of their particular position (whether it’s putting a pause on superintelligent AI, longtermism and global catastrophic risk, or shrimp welfare) that they’re committed to getting the rest of us to come around. And sometimes, they’re even successful!

Also, they tend to put on crazy events like AI Law-a-Thons and set up EA Hotels and rationalist group homes, whereas I get tired just thinking about organizing anything larger than a six-person dinner party.

Look, this isn’t a call for people to become rationalists/EAs. That’s like me telling people to go find Jesus or join the Hare Krishnas. Some of the rationalists/EAs I’ve met are absolute dicks, and some have cringe, deeply fucked up, bat-shit crazy opinions. All I’m saying is that sometimes, with the more benign sorts at least, that vibe and unencumbered willingness to be out there is fun to be around. And I had fun at the AI Safety Law-a-Thon, and I think more lawyers, compliance folks, and policy wonks should move out of their comfort zones and do crazy shit like this, because my god, it all feels so boring right now.

Massive shout-out to the folks I met, in particular Gurkenglas, Abel, Kabir, the lovely folks from TikTok, Chris, Demi & Esther. And of course Katalina, who had the energy to get this going.

[1] I was doubly impressed that the AI Safety folks kept it together enough to only mention If Anyone Builds It, Everyone Dies once.

[2] I used to say three things: 1) the weather, 2) the crazy smart vibe, and 3) avocados & good Mexican food, but Ireland has really stepped up in the latter department.
