Can LLMs Unlearn? Part 1: Predictable Law, Unpredictable Machines

Part 1 in a multi-part series on the problems of deterministic laws in an increasingly non-deterministic world.

Heads up: I’m resharing some of my bangers, because my readership has grown considerably since they were first published. You might like this article (the first in a series) where I dive into machine unlearning. You might also like this article, where I compare LLMs to cakes:

For those of you who are following along, this has been a months-long quest to discover whether or not large language model (LLM) providers can comply with data subject rights like the right to erasure & the right to be forgotten (RTBF), and whether LLMs generally can unlearn. To make life simpler for everyone, I’ve created some breadcrumbs.

In Part 1, I lay out the problem: LLMs are not databases, and yet our laws expect them to behave like databases. Data protection laws in particular assume that whatever is easy to do with a database should be just as easy to do with non-deterministic systems like LLMs. I begin to explain why this is hard.

In Part 2, I start to dig into one reason this is so hard: what I call the ‘underpants gnomes/Which Napoleon’ problem. I ask the key question of how to identify a specific individual within a system in order to erase or correct information about that individual. I also touch on how applying certain tools during the initial training process can help, such as Named Entity Resolution, Named Entity Disambiguation, Linking & Knowledge Graphs, but also how that’s really costly, which is probably why no one is doing it.

Part 2.5 discusses my ongoing efforts to have OpenAI (and less successfully, Perplexity) forget my data. OpenAI kinda tries, but it never actually erases data about me. It just applies a rather blunt-force hack to the problem. I discuss whether this is sufficient and potential harms from this approach:

In Part 3, I start to explore the research on machine training and unlearning. I define what machine unlearning / forgetting is, and isn’t, and address the techniques researchers have discovered. I also explain why the gold-standard approach of starting with perfect data or full retraining doesn’t scale well, and why other exact unlearning methods are also difficult to do in practice:

Part 4 continues the technical discussion. In this section I touch on approximate unlearning methods, their strengths and limitations. I also discuss adjacent unlearning techniques like differentially private unlearning and federated unlearning:

In Part 5, I will cover the final bits, including suppression methods & guardrails — essentially what OpenAI does to ‘comply’ with data protection laws like the GDPR. I will also close with my thoughts on the state of the law, and how to reconcile the incongruence between technical complexity and legal certainty.

You are here: Part 1 … Predictable Laws, Unpredictable Machines

Apologies for the delay between posts. It turns out that socializing non-stop for two days straight saps every iota of energy right out of me. Still, I don’t regret attending the IAPP AI Governance Global event in Brussels. I met so many amazing people, dished about data protection, and learned a lot, mostly about pain points and how we’re all blindly trying to identify and describe the elephant that is AI regulatory policy.[1]

But even before the conference, I’ve had AI on the brain. Between the release of the EDPB’s ChatGPT Taskforce report, and a recent query by Jared Browne on why OpenAI can’t correct personal data, I’ve been thinking a lot about the legal implications of large language models:

As these types of questions usually do, I started thinking about other, related questions, like deletion, accuracy, and technical impossibility. I also phoned a lot of friends about how compliance could be done at a technical level, which yielded over 100 comments, tons of insight, and a realization that like so many things, everything is more fractally complex than most of us realize. And because my brain is full of holes, I started writing down what I learned (which will be part of a future post).

But before I dig into the how, I wanted to touch on the bigger problem of applying deterministic laws to non-deterministic systems.

LLMs: How Do They Work?

Most of us know what an LLM is, and so I will skip some of the basics. But for purposes of this piece, it’s good to remember that at its core, an LLM is:

a sophisticated pattern-completion program

trained on gobs of data

in order to generate coherent text

based on statistical relationships.

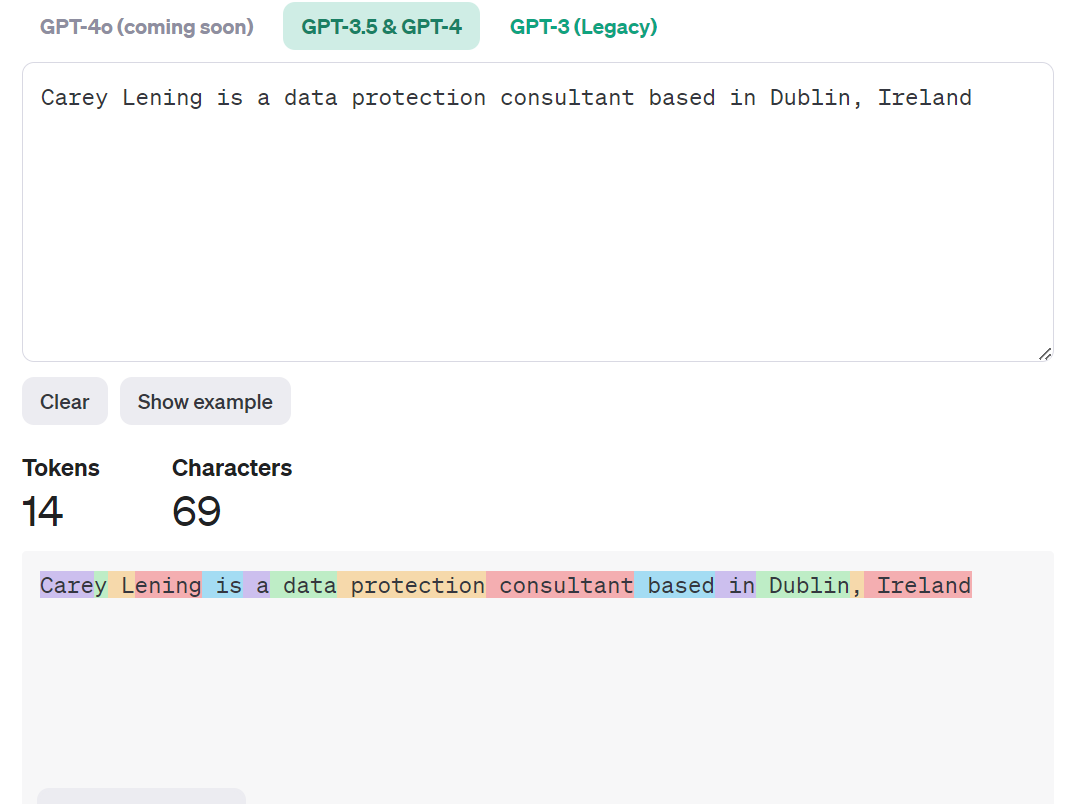

Large language models use training data (like the Common Crawl) to learn what words are most likely to appear next to other words.[2] To do that, the text is first broken into tokens: words, parts of words, word stems, or other characters. For example, here’s how OpenAI breaks down the phrase “Carey Lening is a data protection consultant based in Dublin, Ireland”:

You might notice that tokens don’t always translate to words. Carey, for example, is a combo of ‘Care’ and ‘y’, which makes some sense: ‘Care’ is a common word, and it’s less common to append something like -y, -s, or -t to that. More common names like Bob or David aren’t split up like that because they’re already common.
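To make the splitting a bit more concrete, here is a toy sketch of a greedy longest-match tokenizer. To be clear about the assumptions: the vocabulary is hand-picked and hypothetical, and this is not how OpenAI's tokenizer actually works (real tokenizers learn their vocabulary from data). It only shows why a rarer name can fall apart into pieces while a common one survives whole.

```python
# Toy greedy longest-match tokenizer.
# VOCAB is a hypothetical, hand-picked vocabulary for illustration only;
# real tokenizers learn theirs from very large amounts of text.
VOCAB = {"Care", "y", "Bob", "David"}


def tokenize(text, vocab):
    """Split text into the longest vocabulary pieces, left to right."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until something matches.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # Nothing matched: fall back to a single-character token.
            tokens.append(text[i])
            i += 1
    return tokens


print(tokenize("Carey", VOCAB))  # ['Care', 'y']
print(tokenize("Bob", VOCAB))    # ['Bob']
```

'Carey' is not in the vocabulary, so it falls back to the pieces that are, while 'Bob' comes through as a single token.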

After initial training, an LLM usually needs further training, because its initial word associations are frequently hot garbage. This is most commonly done via supervised fine-tuning by humans or through reinforcement learning from human feedback (RLHF), where humans either supply correct question-answer pairs, or reward ‘correct’ model outputs generated by the LLM itself.[3] The fine-tuning process may also include setting limiting instructions, weights & temperatures, filters, or providing guidance on what types of answers should, and should not, be provided by the LLM. The ‘what not to include’ part is sometimes referred to as suppression.

What’s really important to remember though, is that even after all that human involvement and training, an LLM doesn’t know or understand what it’s saying. As Emily Bender, Timnit Gebru, Angelina McMillan-Major, and Margaret Mitchell[4] reminded us:

Contrary to how it may seem when we observe its output, an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.

Since a model is only as good as the source data it’s trained on and its fine-tuning, if the training data is junk, biased, or contains illegal materials, the model will be influenced by those biases, errors, and junk. But even if the data is quality stuff, sometimes the outputs might nonetheless be entirely wrong or incorrect in context. Human effort takes time, and the world is a bad place. No amount of supervised fine-tuning or RLHF can catch everything. And that doesn’t include all the other reasons related to the model architecture, questionable training processes, task dependency, or intentionally-motivated attacks that can muck things up.

The Complexities of Non-Determinism

Under the GDPR, and other data protection laws, individuals often have numerous rights related to their personal data, including access rights, the right to correct (rectify) inaccurate data, or to have personal data deleted. These rights aren’t absolute, but they are usually quite broad.

The thing is, these rights were codified when data consisted of stuff about people located on servers, in a structured database, or stored as part of a “filing system”. To borrow a phrase from computer science, filing systems act ‘deterministically’: that is, they will produce the same output (or file, or query response) given the same input.[5] In other words, that funny cat meme you saved in C:/Downloads as cat.jpg will be the same cat picture tomorrow. And you’ll be able to retrieve it today, tomorrow, or next year provided nothing changes.
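The determinism described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `filing_system` dictionary, the file contents, and the helper names are all hypothetical stand-ins for a real file system or database. The point is that retrieval is repeatable and erasure is an exact operation.

```python
import hashlib

# A hypothetical, minimal "filing system": file name -> file contents.
filing_system = {"cat.jpg": b"\xff\xd8 funny cat bytes"}


def retrieve(name):
    """Return the stored bytes for a name, or None if nothing is stored."""
    return filing_system.get(name)


def fingerprint(data):
    """Hash the contents so two retrievals can be compared exactly."""
    return hashlib.sha256(data).hexdigest()


def erase(name):
    """Delete the record; returns True if something was actually removed."""
    return filing_system.pop(name, None) is not None


today = retrieve("cat.jpg")
tomorrow = retrieve("cat.jpg")
same = fingerprint(today) == fingerprint(tomorrow)  # same input, same output

erased = erase("cat.jpg")
after = retrieve("cat.jpg")  # the record is simply gone

print(same, erased, after)  # True True None
```

Access, rectification, and erasure all reduce to "find the record, then act on it", which is the model of the world the law quietly assumes.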

Privacy and data protection laws, including the GDPR, were crafted with determinism in mind (even though they don’t specifically call this out). This is why the GDPR’s material scope is limited to the processing of personal data ‘wholly or partly by automated means,’ and to non-automated processing where the data forms ‘part of a filing system’ or is intended to do so.[6] If it weren’t, we’d all need to spout privacy notices every time we talked shit about our colleagues at the big office party, or a comedian roasted someone as part of their set. And don’t even get me started on the lawful basis question.[7]

But things get way more tricky when it comes to LLMs, precisely because the same input doesn’t guarantee the same output. Their results—the probabilities of those token pairs—may change depending on the specific word choice in the prompt given, model versioning, math, or tons of other factors not entirely understood. Searching for cat.jpg on ChatGPT won’t give you the same cat.jpg.

It gives you something more like this:

In other words, LLMs are non-deterministic systems. Or as someone more clever than me noted, an LLM is like baking a cake. It's easy to add the ingredients in the first place (training, fine-tuning), but taking them out after the cake has been baked? Good luck with that.
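Here is a rough sketch of one source of that non-determinism: sampling the next token from a probability distribution rather than looking up a stored answer. The candidate tokens and their scores are invented for illustration, and real systems have other sources of variation too (model versions, prompt wording, and so on), so treat this as a simplified picture rather than a description of any particular model.

```python
import math
import random

# Hypothetical scores for the token that follows "Carey Lening is a ...".
# The numbers are invented for illustration, not taken from a real model.
SCORES = {"consultant": 2.0, "lawyer": 1.6, "writer": 1.2, "chef": 0.2}


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = {tok: s / temperature for tok, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next_token(scores, temperature, rng):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    tokens = list(probs)
    weights = [probs[tok] for tok in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random()  # unseeded on purpose: each run differs
answers = [sample_next_token(SCORES, 1.0, rng) for _ in range(10)]
print(answers)  # same "prompt" ten times, usually a mix of answers
```

Ask the same question ten times and you will usually get a mix of answers, which is exactly what a filing system never does.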

Importantly, unlike a filing system, there usually isn’t a single source file or even a set of files the model is calling on when it’s generating text, summarizing a paper, or creating an image.[8] That means it may be hard or even impossible to point to how the LLM arrived at an incorrect, defamatory, infringing, or illegal result. Sure, the result might be influenced by some underlying source(s), but it also might include completely benign details from other training data where the token math led to a ‘better’ match.

For example, if I ask ‘Who is Bob Smith’, the LLM doesn’t know if I’m asking about Bob Smith at 333 Cherry Lane, Dublin, Ohio, or Bob Smith in Dublin, Ireland. Hell, even if I specify which Bob Smith, it still might conflate the two Bobs, even if the underlying training data is accurate about each respective Bob. Remember: LLMs lack context. They don’t know or understand what they’re saying. How exactly do you correct that? For which Bob? And what happens when Bob Smith refers to any number of people? Which ingredient do you remove from the cake?

Here’s an example in action: I asked OpenAI GPT-4o and GPT-4 for details about “Carey Lening” and received two wildly different and contradictory results. Just like the cat meme.

So, to Jared’s question, I ask: How exactly do you correct, or rectify that? Which answer gets corrected? And what if it changes tomorrow as someone else writes a similar(ish) query about Carey Lening?

Unforgetting Machines

Given that brief background, it should be easier now to understand why something like rectification/correction is far from simple or binary. To quote sage privacy elder Michelle Dennedy, this represents a ‘wicked problem’.

Okay, you might be asking, what about deletion? After all, OpenAI claims that while it cannot correct inaccurate data, it can delete it:

But this isn’t entirely true either. You’ll note that what OpenAI (and likely other providers of large language models, such as Perplexity.ai, Alphabet with Gemini, and Anthropic with Claude) actually offers is more akin to suppression, not true deletion.

Deletion (and rectification, access, objection, hell, even accuracy and data minimization) are all wicked problems because when it comes to LLM-generated outputs, there usually isn’t a single thing to delete, correct, access, or object to, because the outputs themselves are based on the probability of certain words/tokens, and that probability is independent of context or meaning. What we have is the same baked cake problem I mentioned earlier.

Yes, you can delete information related to ‘Bob Smith’ from the training data. OpenAI, Google, and Anthropic make such an option available to individuals who request it, at least if you’re in certain jurisdictions. But it’s likely to be all Bob Smiths, and importantly, it’s most likely to be suppression (akin to what Google does for a right to be forgotten request), not actual deletion.
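The difference between suppression and deletion can be sketched as follows. Everything here is a hypothetical stand-in: `MODEL_KNOWLEDGE` plays the role of whatever a trained model has baked in, and the name-based blocklist is my assumption about how an output guardrail might work in the simplest case, not a description of what OpenAI or anyone else actually runs.

```python
# Hypothetical stand-in for a trained model: what it "knows" is baked in.
MODEL_KNOWLEDGE = {
    "Who is Bob Smith?": "Bob Smith lives at 333 Cherry Lane, Dublin, Ohio.",
    "Tell me about Dublin.": "Dublin is a city in Ireland, and one in Ohio.",
}

# Hypothetical blocklist added after an erasure request.
SUPPRESSED_NAMES = {"bob smith"}


def generate(prompt):
    """The unguarded 'model': nothing has been removed from it."""
    return MODEL_KNOWLEDGE.get(prompt, "I don't know.")


def guarded_generate(prompt):
    """Suppression: refuse whenever a blocked name shows up anywhere."""
    raw = generate(prompt)
    text = (prompt + " " + raw).lower()
    if any(name in text for name in SUPPRESSED_NAMES):
        return "I can't help with that."
    return raw


print(guarded_generate("Who is Bob Smith?"))
print(guarded_generate("Who is Bob Smith in Dublin, Ireland?"))
print(generate("Who is Bob Smith?"))  # the underlying data is still there
```

The filter refuses both the Ohio Bob and the Irish Bob, because it only sees a string of characters, and the information underneath has not gone anywhere.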

In Part 2, I’ll dig into all the varied methods of what is known in the literature as machine unlearning — the respective utility, challenges, and feasibility of each, and possibly, why laws around data protection and even the new EU AI Act probably need to address the technical complexity of applying deterministic law to non-deterministic systems.

Because it isn’t just AI we’re talking about here. Non-determinism is going to bite our legal system in the ass in a bunch of different ways.

—

Massive thanks to the following folks (and so many others) who helped guide some of the questions — and answers — in this post.

Jared Browne, Florian Prem, Kevin Keller, Jeff Jockisch, Debbie Reynolds, Igor Barshteyn, Michael Bommarito, Axel Coustere, Mark Edmondson, Nicos Kekchidis, Pascal Hertzscholdt, Ken Liu, Devansh Devansh, Sairam Sundaresan

[1] To badly paraphrase Shoshana Rosenberg, who was describing said elephant in a far more positive light.

Also, I’d hoped to have this post out before I left, and most of it was written, which is a nice change for me. Alas, like so many of my best intentions, scheduling, shiny objects, and various other life things got in the way. So it’s about a week late. Autant pour moi.

[2] That text rarely comes as-is; it is itself cleansed and pre-processed to remove noise, clean up formatting issues, and toss the obviously bad/illegal/irrelevant info.

[3] This is a painfully brief explanation, and both of these processes contain additional mechanisms to fine-tune models. You can read more here: https://www.turing.com/resources/finetuning-large-language-models

[4] Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, and Shmargaret Shmitchell. 2021. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? In Conference on Fairness, Accountability, and Transparency (FAccT ’21), March 3–10, 2021, Virtual Event, Canada. ACM, New York, NY, USA, 14 pages. https://doi.org/10.1145/3442188.3445922.

[5] I recognize that where the files logically exist in the file system may not be completely deterministic, but the algorithms themselves perform deterministically.

[6] Article 2(1) GDPR.

[7] I’m not saying that they couldn’t do these things lawfully, just that it would add a lot of extra overhead to do it in a data protection-compliant way.

[8] I don’t want to say never because some asshole on the internet will ‘Well actually…’ me and I just don’t want to hear it.
